
Blog

- Releases

- Release v0.8.0

- Release v0.7.0

- Release v0.6.0

- Release v0.5.0

- Release v0.4.0

- Release v0.3.0

- Release v0.2.0

- Release v0.1.0

- Deploy Trustee in Kubernetes

- How Switchboard Oracles Leverage Confidential Containers for Next-Generation Web3 Security

- Confidential Containers (CoCo) and Supply-chain Levels for Software Artifacts (SLSA)

- Confidential Containers without confidential hardware

- Policing a Sandbox

- Memory Protection for AI ML Model Inferencing

- Building Trust into OS images for Confidential Containers

- Introduction to Confidential Containers (CoCo)

Releases

Release v0.8.0

Please see the quickstart guide for details on how to try out Confidential Containers.

Please refer to our Acronyms and Glossary pages for a definition of the acronyms used in this document.

What’s new

- Upstream containerd is supported by all deployment types except enclave-cc.

- This release includes the Nydus snapshotter (for the first time) to support upstream containerd.

- In this release, images are still pulled inside the guest.

- The Nydus snapshotter requires the following annotation on each pod: io.containerd.cri.runtime-handler: <runtime-class>.

- Support for the Nydus snapshotter in peer pods is still experimental. To avoid using it with peer pods, do not set the above annotation.

- Nydus snapshotter support in general is still evolving. See the Limitations section below for details.

- A new component, the Confidential Data Hub (CDH), is now deployed inside the guest.

- CDH is an evolution of the Attestation Agent that supports advanced features.

- CDH supports sealed Kubernetes secrets, which are managed by the control plane but securely unwrapped inside the enclave.

- CDH supports connections to both KBS and KMS.

- The new architecture of the Attestation Agent and CDH allows a client to deploy multiple KBSes.

- One KBS can be used for validating evidence with the Attestation Service while another can provide resources.

- Pulling from an authenticated registry now requires imagePullSecrets.
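The two per-pod requirements above (the Nydus runtime-handler annotation and imagePullSecrets for authenticated registries) can be illustrated with a minimal pod manifest. This is a sketch under stated assumptions: the runtime class kata-qemu, the secret name my-registry-secret, the pod name coco-demo and the image registry.example.com/app:latest are hypothetical placeholders, and the annotation value is assumed to match the pod's runtimeClassName.

```shell
#!/bin/sh
# Sketch: write a pod manifest for CoCo v0.8.0 with upstream containerd + Nydus.
# RUNTIME_CLASS, PULL_SECRET and IMAGE are hypothetical placeholders; adjust them.
RUNTIME_CLASS=${RUNTIME_CLASS:-kata-qemu}
PULL_SECRET=${PULL_SECRET:-my-registry-secret}
IMAGE=${IMAGE:-registry.example.com/app:latest}

cat > coco-pod.yaml <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: coco-demo
  annotations:
    # Required by the Nydus snapshotter; leave it out for peer pods.
    io.containerd.cri.runtime-handler: ${RUNTIME_CLASS}
spec:
  runtimeClassName: ${RUNTIME_CLASS}
  # Needed when the image lives in an authenticated registry.
  imagePullSecrets:
    - name: ${PULL_SECRET}
  containers:
    - name: app
      image: ${IMAGE}
EOF

echo "wrote coco-pod.yaml (runtime class: ${RUNTIME_CLASS})"
```

The referenced secret has to exist first, for example via kubectl create secret docker-registry my-registry-secret --docker-server=<registry> --docker-username=<user> --docker-password=<password>; the manifest can then be applied with kubectl apply -f coco-pod.yaml.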

Peer Pods

- The peerpod-ctl tool has been expanded:

- Can check and clean up old peer pod objects

- Adds SSH authentication support to the libvirt provider

- Supports IBM Cloud

- Support for secure key release at runtime and image decryption via remote attestation on AKS

- Added AMD SEV and IBM s390x support for the libvirt provider

- Container registry authentication is now bootstrapped from user data.

- Enabled public IP usage for pod VMs on the AWS and PowerVS providers

- webhook: added IBM ppc64le platform support

- Support adding custom tags to PodVM instances

- Switched to launching CVM by default on AWS and Azure providers

- Added a rollingUpdate strategy to the cloud-api-adaptor DaemonSet

- Disabled secure boot by default

Hardware Support

Confidential Containers is tested with attestation on the following platforms:

- Intel TDX

- AMD SEV(-ES)

- Intel SGX

The following platforms are untested or partially supported:

- IBM Secure Execution (SE) on IBM zSystems (s390x) running LinuxONE

- AMD SEV-SNP

- ARM CCA

Limitations

The following are known limitations of this release:

- Nydus snapshotter support is not mature.

- The Nydus snapshotter sometimes conflicts with existing node configuration.

- You may need to remove existing container images/snapshots before installing Nydus snapshotter.

- Nydus snapshotter may not support pulling one image with multiple runtime handler annotations even across different pods.

- Host pulling with Nydus snapshotter is not yet enabled.

- Nydus snapshotter is not supported with enclave-cc.

- Pulling container images inside the guest may have negative performance implications, including greater resource usage and slower startup.

- crio support is still evolving.

- Platform support is rapidly changing

- Image signature validation with AMD SEV-ES is not covered by CI.

- SELinux is not supported on the host and must be set to permissive if in use.

- The generic KBS does not yet support all platforms.

- The format of encrypted container images is still subject to change

- The ocicrypt container image format itself may still change

- The tools to generate images are not in their final form

- The image format itself is subject to change in upcoming releases

- Not all image repositories support encrypted container images.

- Complete integration with Kubernetes is still in progress.

- OpenShift support is not yet complete.

- Existing APIs do not fully support the CoCo security and threat model. More info

- Some commands accessing confidential data, such as kubectl exec, may either fail to work or incorrectly expose information to the host.

- The CoCo community aspires to adopt open source security best practices, but not all practices have been adopted yet.

- We track our status with the OpenSSF Best Practices Badge, which improved to 69% at the time of this release.

- Vulnerability reporting mechanisms still need to be created. Public GitHub issues are still appropriate for this release until private reporting is established.

- Container metadata, such as environment variables, is not measured.

- Kata Agent does not validate mount requests. A malicious host might be able to mount a shared filesystem into the PodVM.
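For the SELinux limitation listed above, a small host pre-flight check can catch an enforcing host before installation. This is a sketch that assumes the standard getenforce/setenforce tools; the helper name selinux_mode_ok is hypothetical, and treating a host without getenforce as not using SELinux is an assumption.

```shell
#!/bin/sh
# Succeeds if the given SELinux mode is acceptable for a CoCo host:
# SELinux must be permissive, or not in use at all.
selinux_mode_ok() {
  case "$1" in
    Permissive|Disabled) return 0 ;;
    *) return 1 ;;   # Enforcing, or anything unexpected
  esac
}

# Assumption: no getenforce binary means SELinux is not in use on this host.
if command -v getenforce >/dev/null 2>&1; then
  mode=$(getenforce)
else
  mode=Disabled
fi

if selinux_mode_ok "$mode"; then
  echo "SELinux mode: $mode (ok)"
else
  # 'setenforce 0' switches to permissive until the next reboot; to make it
  # persistent, set SELINUX=permissive in /etc/selinux/config.
  echo "SELinux mode: $mode; run 'sudo setenforce 0' before installing CoCo" >&2
fi
```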

CVE Fixes

None

Release v0.7.0

Please see the quickstart guide for details on how to try out Confidential Containers.

Please refer to our Acronyms and Glossary pages for a definition of the acronyms used in this document.

What’s new

- Flexible instance types/profiles support for peer-pods

- Ability to use CSI Persistent Volume with peer-pods on Azure and IBM Cloud

- EAA-KBC/Verdictd support removed from enclave-cc

- Baremetal SNP without attestation available via operator

- Guest components (attestation-agent, image-rs and ocicrypt-rs) merged into one repository

- Documentation and community repositories merged together

Hardware Support

Confidential Containers is tested with attestation on the following platforms:

- Intel TDX

- AMD SEV(-ES)

- Intel SGX

The following platforms are untested or partially supported:

- IBM Secure Execution (SE) on IBM zSystems (s390x) running LinuxONE

- AMD SEV-SNP

The following platforms are in development:

- ARM CCA

Limitations

The following are known limitations of this release:

- Platform support is rapidly changing

- Image signature validation with AMD SEV-ES is not covered by CI.

- SELinux is not supported on the host and must be set to permissive if in use.

- The generic KBS does not yet support all platforms.

- The format of encrypted container images is still subject to change

- The ocicrypt container image format itself may still change

- The tools to generate images are not in their final form

- The image format itself is subject to change in upcoming releases

- Not all image repositories support encrypted container images.

- CoCo currently requires a custom build of containerd, which is installed by the operator.

- The codepath for pulling images will change significantly in future releases.

- crio is only supported with cloud-api-adaptor.

- Complete integration with Kubernetes is still in progress.

- OpenShift support is not yet complete.

- Existing APIs do not fully support the CoCo security and threat model. More info

- Some commands accessing confidential data, such as kubectl exec, may either fail to work or incorrectly expose information to the host.

- Container images must be downloaded separately (inside the guest) for each pod. More info

- The CoCo community aspires to adopt open source security best practices, but not all practices have been adopted yet.

- We track our status with the OpenSSF Best Practices Badge, which remained at 64% at the time of this release.

- Vulnerability reporting mechanisms still need to be created. Public GitHub issues are still appropriate for this release until private reporting is established.

CVE Fixes

None

Release v0.6.0

Please see the quickstart guide for details on how to try out Confidential Containers.

Please refer to our Acronyms and Glossary pages for a definition of the acronyms used in this document.

What’s new

- Support for attesting pod VMs with Azure vTPMs on SEV-SNP

- Support for using Project Amber as an attestation service

- Support for Cosign signature validation with s390x

- Pulling guest images with many layers can no longer cause guest CPU starvation.

- Attestation Service upgraded to avoid several security issues in Go packages.

- CC-KBC & KBS support with SGX attester/verifier for Occlum and CI for enclave-cc

Hardware Support

Confidential Containers is tested with attestation on the following platforms:

- Intel TDX

- AMD SEV(-ES)

- Intel SGX

The following platforms are untested or partially supported:

- IBM Secure Execution (SE) on IBM zSystems (s390x) running LinuxONE

- AMD SEV-SNP

The following platforms are in development:

- ARM CCA

Limitations

The following are known limitations of this release:

- Platform support is rapidly changing

- Image signature validation with AMD SEV-ES is not covered by CI.

- SELinux is not supported on the host and must be set to permissive if in use.

- The generic KBS does not yet support all platforms.

- The format of encrypted container images is still subject to change

- The ocicrypt container image format itself may still change

- The tools to generate images are not in their final form

- The image format itself is subject to change in upcoming releases

- Not all image repositories support encrypted container images.

- CoCo currently requires a custom build of containerd, which is installed by the operator.

- The codepath for pulling images will change significantly in future releases.

- crio is only supported with cloud-api-adaptor.

- Complete integration with Kubernetes is still in progress.

- OpenShift support is not yet complete.

- Existing APIs do not fully support the CoCo security and threat model. More info

- Some commands accessing confidential data, such as kubectl exec, may either fail to work or incorrectly expose information to the host.

- Container images must be downloaded separately (inside the guest) for each pod. More info

- The CoCo community aspires to adopt open source security best practices, but not all practices have been adopted yet.

- We track our status with the OpenSSF Best Practices Badge, which remained at 64% at the time of this release.

- Vulnerability reporting mechanisms still need to be created. Public GitHub issues are still appropriate for this release until private reporting is established.

CVE Fixes

None

Release v0.5.0

This release includes breaking changes to the format of encrypted images. See below for more details. Images that were encrypted using tooling from previous releases will fail with this release. The process for validating signed images is also slightly different.

Please see the quickstart guide for details on how to try out Confidential Containers.

Please refer to our Acronyms and Glossary pages for a definition of the acronyms used in this document.

What’s new

- Process-based isolation is now fully supported, with SGX hardware added to the enclave-cc CI.

- Remote hypervisor support has been added to the CoCo operator, which helps enable creating containers as ‘peer pods’, either locally or on Cloud Service Provider infrastructure. See the README for more information and installation instructions.

- The KBS Resource URI Scheme has been published to identify all confidential resources.

- Different KBCs now share an image encryption format, so they can be used interchangeably.

- The generic Key Broker System (KBS) is now supported. This includes the KBS itself, which relies on the Attestation Service (AS) for attestation evidence verification. Reference Values are provided to the AS by the Reference Value Provider Service (RVPS). Currently, only TDX and a sample mode are supported with the generic KBS. Other platforms are in development.

- SEV configuration can be set with annotations.

- SEV-ES is now tested in the CI.

- Some developmental SEV-SNP components can be manually enabled to test SNP containers without attestation.
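The KBS Resource URI Scheme mentioned above names each confidential resource by a repository, a type and a tag under a kbs:// URI. The sketch below splits such a URI into its parts. It assumes the form kbs://<kbs_host>:<kbs_port>/<repository>/<type>/<tag>, with the host part allowed to be empty (kbs:///<repository>/<type>/<tag>); the published scheme remains the authoritative grammar, and the function name parse_kbs_uri and the example values are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: split a KBS resource URI of the assumed form
#   kbs://<kbs_host>:<kbs_port>/<repository>/<type>/<tag>
# where the host:port part may be empty (kbs:///<repository>/<type>/<tag>).
parse_kbs_uri() {
  case "$1" in
    kbs://*) ;;
    *) echo "not a kbs:// URI: $1" >&2; return 1 ;;
  esac
  rest=${1#kbs://}     # <host:port>/<repository>/<type>/<tag>
  host=${rest%%/*}     # everything before the first '/', possibly empty
  path=${rest#*/}      # <repository>/<type>/<tag>
  IFS=/ read -r repository res_type tag extra <<EOF
$path
EOF
  # Exactly three path segments are expected.
  if [ -z "$repository" ] || [ -z "$res_type" ] || [ -z "$tag" ] || [ -n "$extra" ]; then
    echo "expected exactly <repository>/<type>/<tag> in: $1" >&2
    return 1
  fi
  echo "host=$host repository=$repository type=$res_type tag=$tag"
}
```

For example, parse_kbs_uri kbs:///default/key/1 prints host= repository=default type=key tag=1, with an empty host because none was given.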

Hardware Support

Confidential Containers is tested with attestation on the following platforms:

- Intel TDX

- AMD SEV(-ES)

- Intel SGX

The following platforms are untested or partially supported:

- IBM Secure Execution (SE) on IBM zSystems (s390x) running LinuxONE

The following platforms are in development:

- AMD SEV-SNP

Limitations

The following are known limitations of this release:

- Platform support is currently limited, and rapidly changing

- Image signature validation with AMD SEV-ES is not covered by CI.

- s390x does not support cosign signature validation

- SELinux is not supported on the host and must be set to permissive if in use.

- Attestation and key brokering support varies by platform.

- The generic KBS is only supported on TDX. Other platforms have different solutions.

- The format of encrypted container images is still subject to change

- The ocicrypt container image format itself may still change

- The tools to generate images are not in their final form

- The image format itself is subject to change in upcoming releases

- Image repository support for encrypted images is unequal

- CoCo currently requires a custom build of containerd

- The CoCo operator will deploy the correct version of containerd for you

- Changes are required to delegate PullImage to the agent in the virtual machine

- The required changes are not part of vanilla containerd

- The final form of the required changes in containerd is expected to be different

- crio is not supported

- CoCo is not fully integrated with the orchestration ecosystem (Kubernetes, OpenShift)

- OpenShift support is not yet complete.

- Existing APIs do not fully support the CoCo security and threat model. More info

- Some commands accessing confidential data, such as kubectl exec, may either fail to work or incorrectly expose information to the host.

- Container image sharing is not possible in this release

- Container images are downloaded by the guest (with encryption), not by the host

- As a result, the same image will be downloaded separately by every pod using it, not shared between pods on the same host. More info

- The CoCo community aspires to adopt open source security best practices, but not all practices have been adopted yet.

- We track our status with the OpenSSF Best Practices Badge, which increased from 49% to 64% at the time of this release.

- All CoCo repositories now have automated tests, including linting, incorporated into CI.

- Vulnerability reporting mechanisms still need to be created. Public GitHub issues are still appropriate for this release until private reporting is established.

CVE Fixes

None

Release v0.4.0

Please see the quickstart guide for details on how to try out Confidential Containers.

Please refer to our Acronyms and Glossary pages for a definition of the acronyms used in this document.

What’s new

- This release focused on reducing technical debt. You will not see as many new features in this release, but you will be running on top of more robust code.

- The Skopeo and umoci dependencies have been removed, now that our image-rs component is fully integrated

- Improved CI for SEV

- Improved container support for enclave-cc / SGX

Hardware Support

Confidential Containers is tested with attestation on the following platforms:

- Intel TDX

- AMD SEV

The following platforms are untested or partially supported:

- Intel SGX

- AMD SEV-ES

- IBM Secure Execution (SE) on IBM zSystems (s390x) running LinuxONE

The following platforms are in development:

- AMD SEV-SNP

Limitations

The following are known limitations of this release:

- Platform support is currently limited, and rapidly changing

- AMD SEV-ES is not tested in the CI.

- Image signature validation has not been tested with AMD SEV.

- s390x does not support cosign signature validation

- SELinux is not supported on the host and must be set to permissive if in use.

- Attestation and key brokering support is still under development

- The disk-based key broker client (KBC) is used for non-TEE testing, but is not suitable for production, except with encrypted VM images.

- Currently, there are two key broker services (KBS) that can be used:

- simple-kbs: simple key broker service for SEV(-ES).

- Verdictd: An external project with which Attestation Agent can conduct remote attestation communication and key acquisition via EAA KBC

- The full-featured generic KBS and the corresponding KBC are still in the development stage.

- The format of encrypted container images is still subject to change

- The ocicrypt container image format itself may still change

- The tools to generate images are not in their final form

- The image format itself is subject to change in upcoming releases

- Image repository support for encrypted images is unequal

- CoCo currently requires a custom build of containerd

- The CoCo operator will deploy the correct version of containerd for you

- Changes are required to delegate PullImage to the agent in the virtual machine

- The required changes are not part of vanilla containerd

- The final form of the required changes in containerd is expected to be different

- crio is not supported

- CoCo is not fully integrated with the orchestration ecosystem (Kubernetes, OpenShift)

- OpenShift is a non-starter at the moment due to its dependency on CRI-O

- Existing APIs do not fully support the CoCo security and threat model. More info

- Some commands accessing confidential data, such as kubectl exec, may either fail to work or incorrectly expose information to the host.

- Container image sharing is not possible in this release

- Container images are downloaded by the guest (with encryption), not by the host

- As a result, the same image will be downloaded separately by every pod using it, not shared between pods on the same host. More info

- The CoCo community aspires to adopt open source security best practices, but not all practices have been adopted yet.

- We track our status with the OpenSSF Best Practices Badge, which increased to 49% at the time of this release.

- The main gaps are in test coverage, both general and security tests.

- Vulnerability reporting mechanisms also need to be created. Public GitHub issues are still appropriate for this release until private reporting is established.

CVE Fixes

None

Release v0.3.0

Code Freeze: January 13th, 2023

Please see the quickstart guide for details on how to try out Confidential Containers.

What’s new

- Support for pulling images from authenticated container registries. See design info.

- Significantly reduced resource requirements for image pulling

- Attestation support for AMD SEV-ES

- kata-qemu-tdx supports and has been tested with Verdictd

- Support for the get_resource endpoint with SEV(-ES)

- Enabled cosign signature support in enclave-cc / SGX

- SEV attestation bug fixes

- Measured rootfs now works with the kata-clh, kata-qemu, kata-clh-tdx, and kata-qemu-tdx runtime classes.

- IBM zSystems / LinuxONE (s390x) enablement and CI verification on non-TEE environments

- Enhanced docs, config, CI pipeline and test coverage for enclave-cc / SGX

Hardware Support

Confidential Containers is tested with attestation on the following platforms:

- Intel TDX

- AMD SEV

The following platforms are untested or partially supported:

- Intel SGX

- AMD SEV-ES

- IBM Secure Execution (SE) on IBM zSystems & LinuxONE

The following platforms are in development:

- AMD SEV-SNP

Limitations

The following are known limitations of this release:

- Platform support is currently limited, and rapidly changing

- AMD SEV-ES is not tested in the CI.

- Image signature validation has not been tested with AMD SEV.

- s390x does not support cosign signature validation

- SELinux is not supported on the host and must be set to permissive if in use.

- Attestation and key brokering support is still under development

- The disk-based key broker client (KBC) is used for non-TEE testing, but is not suitable for production, except with encrypted VM images.

- Currently, there are two KBS that can be used:

- simple-kbs: simple key broker service (KBS) for SEV(-ES).

- Verdictd: An external project with which Attestation Agent can conduct remote attestation communication and key acquisition via EAA KBC

- The full-featured generic KBS and the corresponding KBC are still in the development stage.

- For developers, other KBCs can be experimented with.

- AMD SEV must use a KBS even for unencrypted images.

- The format of encrypted container images is still subject to change

- The ocicrypt container image format itself may still change

- The tools to generate images are not in their final form

- The image format itself is subject to change in upcoming releases

- Image repository support for encrypted images is unequal

- CoCo currently requires a custom build of containerd

- The CoCo operator will deploy the correct version of containerd for you

- Changes are required to delegate PullImage to the agent in the virtual machine

- The required changes are not part of vanilla containerd

- The final form of the required changes in containerd is expected to be different

- crio is not supported

- CoCo is not fully integrated with the orchestration ecosystem (Kubernetes, OpenShift)

- OpenShift is a non-starter at the moment due to its dependency on CRI-O

- Existing APIs do not fully support the CoCo security and threat model. More info

- Some commands accessing confidential data, such as kubectl exec, may either fail to work or incorrectly expose information to the host.

- Container image sharing is not possible in this release

- Container images are downloaded by the guest (with encryption), not by the host

- As a result, the same image will be downloaded separately by every pod using it, not shared between pods on the same host. More info

- The CoCo community aspires to adopt open source security best practices, but not all practices have been adopted yet.

- We track our status with the OpenSSF Best Practices Badge, which increased to 49% at the time of this release.

- The main gaps are in test coverage, both general and security tests.

- Vulnerability reporting mechanisms also need to be created. Public GitHub issues are still appropriate for this release until private reporting is established.

CVE Fixes

None

Release v0.2.0

Confidential Containers has adopted a six-week release cadence. This is our first release on this schedule. This release mainly features incremental improvements to our build system and tests as well as minor features, adjustments, and cleanup.

Please see the quickstart guide for details on how to try out Confidential Containers.

What’s new

- Kata CI uses existing Kata tooling to build components.

- Kata CI caches build environments for components.

- Pod VM can be launched with measured boot. See more info

- Incremental advances in signature support including verification of cosign-signed images.

- Enclave-cc added to operator, providing initial SGX support.

- KBS no longer required to use unencrypted images with SEV.

- More rigorous versioning of sub-projects

Hardware Support

Confidential Containers is tested with attestation on the following platforms:

- Intel TDX

- AMD SEV

The following platforms are untested or partially supported:

- Intel SGX

- AMD SEV-ES

- IBM Z SE

The following platforms are in development:

- AMD SEV-SNP

Limitations

The following are known limitations of this release:

- Platform support is currently limited, and rapidly changing

- s390x is not supported by the CoCo operator

- AMD SEV-ES has not been tested.

- AMD SEV does not support container image signature validation.

- s390x does not support cosign signature validation

- SELinux is not supported on the host and must be set to permissive if in use.

- Attestation and key brokering support is still under development

- The disk-based key broker client (KBC) is used for non-TEE testing, but is not suitable for production, except with encrypted VM images.

- Currently, there are two KBS that can be used:

- simple-kbs: simple key broker service (KBS) for SEV(-ES).

- Verdictd: An external project with which Attestation Agent can conduct remote attestation communication and key acquisition via EAA KBC

- The full-featured generic KBS and the corresponding KBC are still in the development stage.

- For developers, other KBCs can be experimented with.

- AMD SEV must use a KBS even for unencrypted images.

- The format of encrypted container images is still subject to change

- The ocicrypt container image format itself may still change

- The tools to generate images are not in their final form

- The image format itself is subject to change in upcoming releases

- Image repository support for encrypted images is unequal

- CoCo currently requires a custom build of containerd

- The CoCo operator will deploy the correct version of containerd for you

- Changes are required to delegate PullImage to the agent in the virtual machine

- The required changes are not part of vanilla containerd

- The final form of the required changes in containerd is expected to be different

- crio is not supported

- CoCo is not fully integrated with the orchestration ecosystem (Kubernetes, OpenShift)

- OpenShift is a non-starter at the moment due to its dependency on CRI-O

- Existing APIs do not fully support the CoCo security and threat model. More info

- Some commands accessing confidential data, such as kubectl exec, may either fail to work or incorrectly expose information to the host.

- Container image sharing is not possible in this release

- Container images are downloaded by the guest (with encryption), not by the host

- As a result, the same image will be downloaded separately by every pod using it, not shared between pods on the same host. More info

- The CoCo community aspires to adopt open source security best practices, but not all practices have been adopted yet.

- We track our status with the OpenSSF Best Practices Badge, which increased to 46% at the time of this release.

- The main gaps are in test coverage, both general and security tests.

- Vulnerability reporting mechanisms also need to be created. Public GitHub issues are still appropriate for this release until private reporting is established.

CVE Fixes

None

Release v0.1.0

This is the first full release of Confidential Containers. The goal of this release is to provide a stable, simple, and well-documented base for the Confidential Containers project. The Confidential Containers operator is the focal point of the release. The operator allows users to install Confidential Containers on an existing Kubernetes cluster. This release also provides core Confidential Containers features, such as being able to run encrypted containers on Intel-TDX and AMD-SEV.

Please see the quickstart guide for details on how to try out Confidential Containers.

Hardware Support

Confidential Containers is tested with attestation on the following platforms:

- Intel TDX

- AMD SEV

The following platforms are untested or partially supported:

- AMD SEV-ES

- IBM Z SE

The following platforms are in development:

- Intel SGX

- AMD SEV-SNP

Limitations

The following are known limitations of this release:

- Platform support is currently limited, and rapidly changing

- s390x is not supported by the CoCo operator

- AMD SEV-ES has not been tested.

- AMD SEV does not support container image signature validation.

- Attestation and key brokering support is still under development

- The disk-based key broker client (KBC) is used when there is no HW support, but is not suitable for production (except with encrypted VM images).

- Currently, there are two KBS that can be used:

- simple-kbs: simple key broker service (KBS) for SEV(-ES).

- Verdictd: An external project with which Attestation Agent can conduct remote attestation communication and key acquisition via EAA KBC

- The full-featured generic KBS and the corresponding KBC are still in the development stage.

- For developers, other KBCs can be experimented with.

- AMD SEV must use a KBS even for unencrypted images.

- The format of encrypted container images is still subject to change

- The ocicrypt container image format itself may still change

- The tools to generate images are not in their final form

- The image format itself is subject to change in upcoming releases

- Image repository support for encrypted images is unequal

- CoCo currently requires a custom build of containerd

- The CoCo operator will deploy the correct version of containerd for you

- Changes are required to delegate PullImage to the agent in the virtual machine

- The required changes are not part of vanilla containerd

- The final form of the required changes in containerd is expected to be different

- crio is not supported

- CoCo is not fully integrated with the orchestration ecosystem (Kubernetes, OpenShift)

- OpenShift is a non-starter at the moment due to its dependency on CRI-O

- Existing APIs do not fully support the CoCo security and threat model

- Some commands accessing confidential data, such as kubectl exec, may either fail to work or incorrectly expose information to the host.

- Container image sharing is not possible in this release

- Container images are downloaded by the guest (with encryption), not by the host

- As a result, the same image will be downloaded separately by every pod using it, not shared between pods on the same host.

- The CoCo community aspires to adopt open source security best practices, but not all practices have been adopted yet.

- We track our status with the OpenSSF Best Practices Badge, which was at 43% at the time of this release.

- The main gaps are in test coverage, both general and security tests.

- Vulnerability reporting mechanisms also need to be created. Public GitHub issues are still appropriate for this release until private reporting is established.

CVE Fixes

None - This is our first release.

Deploy Trustee in Kubernetes

Introduction

In this blog post, we’ll go through the deployment of Trustee, the Key Broker Service that provides keys and secrets to clients that want to execute workloads confidentially. Trustee provides a built-in attestation service that complies with the RATS specification.

In this document, we’ll be focusing on how to deploy Trustee in Kubernetes using the Trustee operator.

We highly recommend reading Introducing Confidential Containers Trustee: Attestation Services Solution Overview and Use Cases before deploying it.

Definitions

First of all, let’s introduce some definitions.

In confidential computing environments, Attestation is crucial for verifying the trustworthiness of the location where you plan to run your workload.

The Attester provides Evidence, which is evaluated and appraised to decide its trustworthiness.

The Endorser is the hardware manufacturer that provides an endorsement, which the Verifier uses to validate the Evidence received from the Attester.

The reference value provider service (RVPS) is a component in the Attestation Service (AS) responsible for storing and providing reference values.

Kubernetes deployment

The following instructions assume a Kubernetes cluster is set up with the Operator Lifecycle Manager (OLM) running. OLM helps users install, update, and manage the lifecycle of Kubernetes native applications (Operators) and their associated services.

If we don’t have a running cluster yet, we can easily bring it up with kind. For example:

kind create cluster -n trustee

# install the olm operator

curl -sL https://github.com/operator-framework/operator-lifecycle-manager/releases/download/v0.31.0/install.sh | bash -s v0.31.0

For more information on the Operator Lifecycle Manager (OLM) and the operator installation procedure from OperatorHub.io, please consult this guide.

Subscription

A subscription, defined by a Subscription object, represents an intention to install an Operator. It is the custom resource that relates an Operator to a catalog source:

kubectl apply -f - << EOF
apiVersion: operators.coreos.com/v1alpha1
kind: Subscription
metadata:
  name: my-trustee-operator
  namespace: operators
spec:
  channel: alpha
  name: trustee-operator
  source: operatorhubio-catalog
  sourceNamespace: olm
EOF

Note that the Trustee operator has already been published in the OperatorHub catalog. Trustee operator v0.17.0 is aligned with the CoCo v0.18.0 release.

Check Trustee Operator installation

Now it is time to check if the Trustee operator has been installed properly, by running the command:

kubectl get csv -n operators

We should expect something like:

NAME                       DISPLAY            VERSION   REPLACES                  PHASE
trustee-operator.v0.17.0   Trustee Operator   0.17.0    trustee-operator.v0.5.0   Succeeded
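If you prefer to script this check rather than read the table, a minimal Python sketch could parse the JSON form of the same listing (`kubectl get csv -n operators -o json`) and inspect each ClusterServiceVersion's `status.phase`. The helper names and the trimmed sample JSON are illustrative assumptions, not part of the Trustee operator.

```python
import json


def csv_phases(csv_list_json: str) -> dict:
    """Map each ClusterServiceVersion name to its status.phase."""
    data = json.loads(csv_list_json)
    return {
        item["metadata"]["name"]: item.get("status", {}).get("phase", "Unknown")
        for item in data.get("items", [])
    }


def operator_succeeded(csv_list_json: str, prefix: str = "trustee-operator.") -> bool:
    """Return True if a CSV whose name starts with `prefix` reached the Succeeded phase."""
    return any(
        name.startswith(prefix) and phase == "Succeeded"
        for name, phase in csv_phases(csv_list_json).items()
    )


if __name__ == "__main__":
    # Trimmed, hypothetical excerpt of `kubectl get csv -n operators -o json`
    sample = json.dumps({
        "items": [
            {"metadata": {"name": "trustee-operator.v0.17.0"},
             "status": {"phase": "Succeeded"}}
        ]
    })
    print(operator_succeeded(sample))
```

Any other phase (for example Installing or Failed) means the operator installation has not completed yet.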

Configuration

The Trustee Operator configuration requires a few steps. Some of the steps are provided as an example, but you may want to customize the examples for your real requirements.

There are two profile modes: permissive (for development) and restrictive (for production).

Restrictive Mode

First of all, the required TLS certificates need to be created. For a production environment, customers are typically required to provide their own certificates signed by a valid Certificate Authority (CA).

The following instructions, however, are specifically targeted for a development environment, utilizing self-signed certificates generated by the cert-manager Operator.

helm repo add jetstack https://charts.jetstack.io --force-update
helm install cert-manager jetstack/cert-manager --namespace cert-manager --create-namespace --version v1.19.1 --set crds.enabled=true

Create the certificate issuer and https/token-verification certificates:

kubectl apply -f - << EOF
apiVersion: cert-manager.io/v1
kind: Issuer
metadata:
  name: kbs-https
  namespace: operators
spec:
  selfSigned: {}
---
apiVersion: cert-manager.io/v1
kind: Certificate
metadata:
  name: kbs-https
  namespace: operators
spec:
  dnsNames:
    - kbs-service
  secretName: trustee-tls-cert
  issuerRef:
    name: kbs-https
---
apiVersion: cert-manager.io/v1
kind: Certificate
metadata:
  name: kbs-token
  namespace: operators
spec:
  dnsNames:
    - kbs-service
  secretName: trustee-token-cert
  issuerRef:
    # kbs-https is the only Issuer defined in this manifest
    name: kbs-https
  privateKey:
    algorithm: ECDSA
    encoding: PKCS8
    size: 256
EOF

TrusteeConfig CR creation:

kubectl apply -f - << EOF
apiVersion: confidentialcontainers.org/v1alpha1
kind: TrusteeConfig
metadata:
  labels:
    app.kubernetes.io/name: trusteeconfig
    app.kubernetes.io/instance: trusteeconfig
    app.kubernetes.io/part-of: trustee-operator
    app.kubernetes.io/managed-by: kustomize
    app.kubernetes.io/created-by: trustee-operator
  name: trusteeconfig
  namespace: operators
spec:
  profileType: Restricted
  kbsServiceType: ClusterIP
  httpsSpec:
    tlsSecretName: trustee-tls-cert
  attestationTokenVerificationSpec:
    tlsSecretName: trustee-token-cert
EOF

Permissive Mode

TrusteeConfig CR creation:

kubectl apply -f - << EOF
apiVersion: confidentialcontainers.org/v1alpha1
kind: TrusteeConfig
metadata:
  labels:
    app.kubernetes.io/name: trusteeconfig
    app.kubernetes.io/instance: trusteeconfig
    app.kubernetes.io/part-of: trustee-operator
    app.kubernetes.io/managed-by: kustomize
    app.kubernetes.io/created-by: trustee-operator
  name: trusteeconfig
  namespace: operators
spec:
  profileType: Permissive
  kbsServiceType: ClusterIP
EOF

When the user creates a TrusteeConfig CR, the following objects are created automatically in the operator namespace:

trusteeconfig-kbs-config

This ConfigMap mounts the main KBS configuration file onto the trustee container filesystem. It is boilerplate configuration that works out of the box.

trusteeconfig-resource-policy

This ConfigMap mounts the resource-policy onto the trustee container filesystem. It can be permissive or restrictive depending on the TrusteeConfig profileType. Typically there is no need to edit the ConfigMap content, unless required for specific use cases.

trusteeconfig-attestation-policy

This ConfigMap mounts the attestation-policy onto the trustee container filesystem. Typically there is no need to edit the ConfigMap content; it works out of the box.

trusteeconfig-rvps-reference-values

This ConfigMap mounts the RVPS reference values onto the trustee container filesystem. A sample list of reference values is set by default, so in production it is recommended to edit the ConfigMap and insert the measured PCR values of your platform.

trusteeconfig-tdx-config

This ConfigMap is specific to the Intel TDX platform and mounts the PCCS configuration file onto the trustee container filesystem. It is boilerplate configuration that works out of the box.

trusteeconfig-auth-secret

This Secret resource automatically mounts a private/public key pair onto the trustee container’s filesystem. An administrator possessing the corresponding private key can then invoke the trustee’s admin API to add new secrets or modify policies.

kbsres1

This is a sample Secret for testing purposes. The secret can be retrieved by any attested client.

trusteeconfig-kbs-config

This is the auto-generated KbsConfig custom resource (CR). There is no need to change it; it works out of the box.

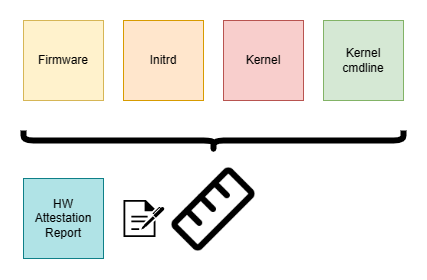

Reference Values

The reference values are an important part of the attestation process. The client collects measurements (of the running software, the TEE hardware and its firmware) and submits a quote with the claims to the attestation server. For the attestation protocol to succeed, these measurements must match one of the (potentially multiple) valid values previously registered with Trustee. You can also apply flexible rules such as “firmware of secure processor > v1.30”. This process guarantees that the cVM (confidential VM) is running the expected software stack and that it hasn’t been tampered with.
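To make the matching rule concrete, here is a toy Python model of the comparison described above: every registered component must have a measured digest from its allow-list, and a version rule such as “firmware > v1.30” is checked separately. The component names (`kernel`, `initrd`, `fw_version`) and the shortened placeholder digests are assumptions for illustration; Trustee itself evaluates claims against reference values through its attestation policy, not through code like this.

```python
def parse_version(version: str) -> tuple:
    """Turn 'v1.30' or '1.30.2' into a tuple of ints that compares numerically."""
    return tuple(int(part) for part in version.lstrip("v").split("."))


def digests_match(measurements: dict, reference_values: dict) -> bool:
    """Every registered component must have a measured digest from its allow-list."""
    return all(
        measurements.get(name) in allowed
        for name, allowed in reference_values.items()
    )


def newer_than(measured: str, minimum: str) -> bool:
    """Flexible rule, e.g. firmware of the secure processor > v1.30."""
    return parse_version(measured) > parse_version(minimum)


if __name__ == "__main__":
    # Hypothetical reference values: several valid digests may be registered per component
    reference_values = {"kernel": ["aa11", "bb22"], "initrd": ["cc33"]}
    measurements = {"kernel": "bb22", "initrd": "cc33", "fw_version": "v1.31"}
    trusted = digests_match(measurements, reference_values) and newer_than(
        measurements["fw_version"], "v1.30"
    )
    print(trusted)
```

Comparing versions as integer tuples avoids the string-comparison trap where "1.4" would sort after "1.30".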

The following command shows how to edit the reference values in RVPS. Note that a sample list of reference values is provided by default, because the sample attester doesn’t handle real measurements. Depending on your hardware platform, you need to register real trusted digests instead.

kubectl edit cm -n operators trusteeconfig-rvps-reference-values

Create secrets

How do you create secrets to be shared with attested clients? In this example we create a secret named test with two entries. These resources (key1, key2) can be retrieved by Trustee clients. You can add more secrets as required.

kubectl create secret generic test --from-literal key1=res1val1 --from-literal key2=res1val2 -n operators

export CR_NAME=$(kubectl get kbsconfig -n operators -o=jsonpath='{.items[0].metadata.name}') && kubectl patch KbsConfig -n operators $CR_NAME --type=json -p='[{"op":"add", "path":"/spec/kbsSecretResources/-", "value":"test"}]'
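The `kubectl patch --type=json` call above sends a single RFC 6902 JSON Patch operation, where the trailing `-` in `/spec/kbsSecretResources/-` means “append to the end of this array”. The minimal Python sketch below applies only the `add` operation to a plain dictionary to show the effect; the starting list content is an assumed example, and this is not how kubectl or the API server implement patching.

```python
import copy


def json_patch_add(doc: dict, path: str, value):
    """Apply one RFC 6902 'add' operation and return a patched copy of doc."""
    if not path.startswith("/"):
        raise ValueError("JSON Pointer paths must start with '/'")
    # Split the JSON Pointer and unescape '~1' -> '/' and '~0' -> '~'
    tokens = [t.replace("~1", "/").replace("~0", "~") for t in path[1:].split("/")]
    result = copy.deepcopy(doc)
    target = result
    for token in tokens[:-1]:
        target = target[int(token)] if isinstance(target, list) else target[token]
    last = tokens[-1]
    if isinstance(target, list):
        if last == "-":
            target.append(value)  # '-' addresses the position past the last element
        else:
            target.insert(int(last), value)
    else:
        target[last] = value
    return result


if __name__ == "__main__":
    kbsconfig = {"spec": {"kbsSecretResources": ["kbsres1"]}}  # assumed starting state
    patched = json_patch_add(kbsconfig, "/spec/kbsSecretResources/-", "test")
    print(patched["spec"]["kbsSecretResources"])
```

If `kbsSecretResources` did not exist yet, the real patch would fail because RFC 6902 does not create missing parents; in this sketch the lookup raises a `KeyError`.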

Resource policy

A resource policy that works out of the box is created by default by the TrusteeConfig CR. The policy content depends on the chosen TrusteeConfig profile (permissive or restrictive). Run the following command if you need to customize the default policy.

kubectl edit cm -n operators trusteeconfig-resource-policy

Attestation policy

An attestation policy that works out of the box is created by default by the TrusteeConfig CR. It accepts any workload that passes attestation and meets baseline trust claims. Run the following command if you need to customize the default policy.

kubectl edit cm -n operators trusteeconfig-attestation-policy

KbsConfig CR

The KbsConfig custom resource (CR) is automatically generated by the operator. Its name can be fetched with this command:

KBS_CONFIG=$(kubectl get trusteeconfig trusteeconfig -n operators -o jsonpath='{.status.kbsConfigRef.name}')

Edit the KbsConfig with the following command if you need to provide optional configuration:

kubectl edit KbsConfig -n operators $KBS_CONFIG

For example:

# Specify this attribute for enabling DEBUG log in trustee pods
KbsEnvVars:
  RUST_LOG: debug
# Number of desired trustee pods. The default is 1; increase the number of replicas to enable High Availability
KbsDeploymentSpec:
  replicas: 1

Set the namespace for the current context:

kubectl config set-context --current --namespace=operators

Check if the pods are running:

kubectl get pods -n operators

NAME                                                   READY   STATUS    RESTARTS   AGE
trustee-deployment-7bdc6858d7-bdncx                    1/1     Running   0          69s
trustee-operator-controller-manager-6c584fc969-8dz2d   1/1     Running   0          4h7m

Also, the log should report something like:

POD_NAME=$(kubectl get pods -l app=kbs -o jsonpath='{.items[0].metadata.name}' -n operators)

kubectl logs -n operators $POD_NAME

[2026-02-10T15:21:47Z INFO kbs] Using config file /etc/kbs-config/kbs-config.toml

[2026-02-10T15:21:47Z INFO tracing::span] Initialize RVPS;

[2026-02-10T15:21:47Z INFO attestation_service::rvps] launch a built-in RVPS.

[2026-02-10T15:21:47Z WARN reference_value_provider_service::extractors] No configuration for SWID extractor provided. Default will be used.

[2026-02-10T15:21:47Z WARN attestation_service::ear_token::broker] Simple Token has been deprecated in v0.16.0. Note that the `attestation_token_broker` config field `type` is now ignored and the token will always be an EAR token.

[2026-02-10T15:21:47Z WARN attestation_service::policy_engine::opa] Policy default_cpu already exists, so the default policy will not be written.

[2026-02-10T15:21:47Z INFO kbs::api_server] Starting HTTPS server at [0.0.0.0:8080]

[2026-02-10T15:21:47Z INFO actix_server::builder] starting 4 workers

[2026-02-10T15:21:47Z INFO actix_server::server] Actix runtime found; starting in Actix runtime

[2026-02-10T15:21:47Z INFO actix_server::server] starting service: "actix-web-service-0.0.0.0:8080", workers: 4, listening on: 0.0.0.0:8080

End-to-End Attestation

We create a pod from an existing image in which kbs-client is deployed:

kubectl apply -f - << EOF
apiVersion: v1
kind: Pod
metadata:
  name: kbs-client
  namespace: operators
spec:
  containers:
    - name: kbs-client
      image: quay.io/confidential-containers/kbs-client:v0.17.0
      imagePullPolicy: IfNotPresent
      command:
        - sleep
        - "360000"
      env:
        - name: RUST_LOG
          value: none
EOF

Finally, we can test the entire attestation protocol by fetching one of the aforementioned secrets over HTTPS.

Note: Make sure the resource-policy is permissive for testing purposes. For example:

package policy

default allow = true

kubectl get secret trustee-tls-cert -n operators -o json | jq -r '.data."tls.crt"' | base64 --decode > https.crt

kubectl cp -n operators https.crt kbs-client:/

kubectl exec -it -n operators kbs-client -- kbs-client --cert-file /https.crt --url https://kbs-service:8080 get-resource --path default/kbsres1/key1

cmVzMXZhbDE=

If we type the command:

echo cmVzMXZhbDE= | base64 -d

We’ll get res1val1, the secret we created before.
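The same two steps can be sketched in Python. The resource path used above has three segments; the sketch assumes the KBS repository/type/tag layout, where in this example `default` is the repository, `kbsres1` the name of the Kubernetes Secret and `key1` the key inside it. The helper names are hypothetical, and the value printed by `get-resource` is treated as standard base64, as in the `base64 -d` command above.

```python
import base64


def split_resource_path(path: str) -> tuple:
    """Split a resource path such as 'default/kbsres1/key1' into its three segments."""
    parts = path.strip("/").split("/")
    if len(parts) != 3:
        raise ValueError(f"expected repository/type/tag, got {path!r}")
    return tuple(parts)


def decode_resource(b64_payload: str) -> str:
    """Decode the base64 value printed by kbs-client into text."""
    return base64.b64decode(b64_payload, validate=True).decode("utf-8")


if __name__ == "__main__":
    print(split_resource_path("default/kbsres1/key1"))
    print(decode_resource("cmVzMXZhbDE="))
```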

Summary

In this blog post we have shown how to use the Trustee operator to deploy Trustee and run the attestation workflow with a sample attester.

How Switchboard Oracles Leverage Confidential Containers for Next-Generation Web3 Security

The Web3 ecosystem depends on oracles to bridge on-chain smart contracts with off-chain data. However, this critical infrastructure has historically faced significant security challenges. Today, we’re excited to share how Switchboard has integrated Confidential Containers (CoCo) with AMD SEV-SNP technology to create a groundbreaking security model for their decentralized oracle network.

The Oracle Security Challenge

Oracles serve as the eyes and ears of blockchain applications, providing essential data that powers DeFi protocols, prediction markets, gaming applications, and more. With billions of dollars depending on accurate oracle data, security isn’t optional—it’s existential.

Traditional oracle solutions face several key vulnerabilities:

- Infrastructure-level attacks from privileged users like cloud administrators

- Memory inspection that could reveal sensitive data during processing

- Lack of verifiable attestation to prove computations occur in a secure environment

- Centralization risks when security requirements limit who can run infrastructure

Enter Confidential Containers

Confidential Containers extends the isolation properties of Kata Containers with confidential computing capabilities. For Switchboard, this combination provides the ideal foundation for their security-critical oracle infrastructure.

How It Works

Switchboard’s implementation uses AMD EPYC processors with SEV-SNP (Secure Encrypted Virtualization-Secure Nested Paging) technology to create hardware-enforced trusted execution environments. This technology:

- Encrypts all memory contents of the container, protecting data-in-use from privileged attackers

- Provides cryptographic attestation that oracle code runs in a genuine, unmodified environment

- Maintains performance while adding security guarantees

- Enables secure decentralization through their Node Partners program

The integration builds on Switchboard’s previous work with Kata Containers, adding the critical confidential computing layer that protects against an entire class of infrastructure-level attacks.

Implementation Journey

While Confidential Containers offers tremendous security benefits, implementing it for production use required collaboration with the CoCo community. The Switchboard team worked through several challenges:

- Extending CoCo’s capabilities through custom forks to meet specific oracle security requirements

- Optimizing performance for the low-latency needs of oracle data delivery

- Creating verification mechanisms to ensure node partners run the attested confidential environments

- Developing operational procedures for secure key management within the confidential environment

“The Confidential Containers community has been instrumental in helping us implement this technology for our production environment,” says the Switchboard team. “Their guidance helped us navigate the complexities of confidential computing while maintaining the performance our oracle network requires.”

Technical Architecture

At a high level, Switchboard’s implementation includes:

- AMD SEV-SNP enabled hosts running on bare metal with EPYC CPUs

- Confidential Containers runtime providing the trusted execution environment

- Remote attestation service verifying the authenticity of oracle environments

- Secure data processing pipeline that handles oracle requests within the protected memory

- Blockchain integration layer that submits verified data on-chain

This architecture ensures that from the moment data enters the oracle system until it’s cryptographically signed and submitted on-chain, it remains protected by hardware-enforced security boundaries.

The Road Ahead

Switchboard’s integration with Confidential Containers represents just the beginning of their confidential computing journey. Future plans include:

- Support for Intel TDX (Trust Domain Extensions) to broaden hardware compatibility

- Enhanced attestation mechanisms for multi-party verification

- Performance optimizations for specific oracle workloads

- Expanded node partner program to further decentralize the network

Conclusion

The integration of Switchboard Oracles with Confidential Containers demonstrates how cutting-edge confidential computing technology can address the unique security challenges of Web3 infrastructure. By protecting sensitive oracle operations with hardware-enforced memory encryption and attestation, Switchboard is setting a new standard for oracle security.

As the Switchboard Node Partners program goes live on March 11, 2025, this technology will enable a truly decentralized oracle network that doesn’t compromise on security—a critical advancement for the entire Web3 ecosystem.

For more information about Confidential Containers, visit confidentialcontainers.org. To learn more about Switchboard’s oracle network, check out their documentation.

Confidential Containers (CoCo) and Supply-chain Levels for Software Artifacts (SLSA)

A few of us in the CNCF Confidential Containers (CoCo) community met some time ago to discuss provenance management in the project.

One of our focus items was to introduce support for generating SLSA provenance and SBOM for CoCo artifacts. We are happy to say that the CoCo project has started generating and verifying the provenance of its release artifacts according to the SLSA standard.

This post is a summary of the progress made to date and the work that is remaining.

About SLSA

SLSA is an incrementally adoptable set of guidelines for supply chain integrity. Integrity means protection against tampering or unauthorized modification at any stage of the software lifecycle. Its specifications are helpful for both software producers and consumers: producers can follow the SLSA guidelines to make their software supply chain more secure, and consumers can use SLSA to decide whether to trust a software package.

The following diagram depicts several known points of attack.

Src: https://slsa.dev

Note that SLSA does not currently address all of the threats presented here. For example, SLSA v1.0 does not address source threats. SLSA addresses build threats. SLSA also mitigates dependency threats when you verify your dependencies’ SLSA provenance.

SLSA Levels

SLSA structures itself into a series of levels that provide progressively stronger security guarantees for the supply chain.

These levels are divided into tracks, each focusing on a specific aspect of supply chain security. The tracks allow for the evolution of the SLSA specifications, enabling the introduction of new tracks without affecting the validity of existing levels.

SLSA Requirements for Build Levels

The build requirements are listed below.

| Track/Level | Requirements | Focus |
|---|---|---|
| Build L0 | (none) | (n/a) |
| Build L1 | Provenance showing how the package was built | Mistakes, documentation |
| Build L2 | Signed provenance, generated by a hosted build platform | Tampering after the build |
| Build L3 | Hardened build platform | Tampering during the build |

Src: https://slsa.dev/spec/v1.0/levels

For additional details on SLSA, we recommend going through the official website: https://slsa.dev
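The build track is cumulative: each level requires everything from the level below plus one new property. The Python sketch below encodes only the three headline requirements from the table; the real SLSA specification attaches more detailed requirements to each level, so treat this as a simplification, and the `BuildFacts` field names as made up for the example.

```python
from dataclasses import dataclass


@dataclass
class BuildFacts:
    has_provenance: bool             # Build L1: provenance showing how it was built
    signed_by_hosted_platform: bool  # Build L2: signed provenance from a hosted build platform
    hardened_build_platform: bool    # Build L3: hardened build platform


def slsa_build_level(facts: BuildFacts) -> int:
    """Return the highest build level whose requirements, and all lower ones, are met."""
    if not facts.has_provenance:
        return 0
    if not facts.signed_by_hosted_platform:
        return 1
    if not facts.hardened_build_platform:
        return 2
    return 3


if __name__ == "__main__":
    # Signed provenance from a hosted platform, without claiming a hardened platform
    print(slsa_build_level(BuildFacts(True, True, False)))
```

Note that a hardened platform without signed provenance still yields level 1, because the checks stop at the first missing requirement.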

What does SLSA do for CoCo?

Confidential containers are deployed into a Trusted Execution Environment (TEE) driven by a small set of critical infrastructure components, like kata-agent and attestation-agent, running on top of a custom-tailored Linux system. We consider the sum of the software in such a TEE to be its Trusted Computing Base (TCB).

We have to trust the TCB not to perform any nefarious or undesired actions that would compromise the confidentiality of the data entrusted to the TEE. This has two immediate implications:

- We want to keep the software footprint in the TCB as small as possible

Less is not just more in this context. It’s imperative because we cannot reasonably establish trust in a large body of software. Each addition to the software surface opens a vector for potential attacks on confidentiality, and we need to scrutinize it for such problems.

- We have to be conscious about how we derive our trust

To trust a TCB, we need to know how it has been assembled, specifically from which components and source code and in which environments it has been built. For an opaque component of questionable and untraceable provenance, it’s hard to make a claim about its trustworthiness. A maximalist argument could be made for not providing any binaries but only auditable source code and build instructions.

This is a completely valid strategy for a particular class of consumers; however, for many users it is not a practical way to test or adopt CoCo for their use cases. In some deployment options, the CoCo project trusts specific upstream dependencies (like the Fedora Project) to provide legitimate artifacts. It also reuses artifacts built in specialized repositories and shared across the project. For the latter scenario, we employ SLSA attestation to establish trust in the components of our TCB.

CoCo Build Level

Currently the CoCo project is at Build L2.

The CoCo project involves different sub-projects/components. It uses the attest-build-provenance GitHub action to generate signed SLSA provenance in the in-toto format. You can verify the provenance using the gh attestation command of the GitHub CLI.

It’s essential to understand Attestation and Provenance in the context of SLSA. An attestation is an authenticated statement (metadata) about a software artifact or collection of software artifacts. Provenance is an attestation describing how the outputs were produced, including the build platform and external parameters.
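To see how provenance is bound to an artifact, the Python sketch below builds a minimal in-toto Statement and checks whether an artifact’s SHA-256 digest appears among its `subject` entries. The field names follow the in-toto Statement v1 layout with the SLSA v1 provenance predicate type; the predicate body is left empty for brevity. This covers only the digest binding: it does not verify the Sigstore signature wrapping the statement, which is what `gh attestation verify` takes care of.

```python
import hashlib

STATEMENT_TYPE = "https://in-toto.io/Statement/v1"
SLSA_PROVENANCE_V1 = "https://slsa.dev/provenance/v1"


def make_statement(name: str, artifact: bytes) -> dict:
    """Build a minimal in-toto Statement whose single subject is `artifact`."""
    return {
        "_type": STATEMENT_TYPE,
        "subject": [{"name": name, "digest": {"sha256": hashlib.sha256(artifact).hexdigest()}}],
        "predicateType": SLSA_PROVENANCE_V1,
        "predicate": {},  # buildDefinition / runDetails omitted in this sketch
    }


def statement_covers(statement: dict, artifact: bytes) -> bool:
    """True if this SLSA provenance statement lists the artifact's digest as a subject."""
    if statement.get("_type") != STATEMENT_TYPE:
        return False
    if statement.get("predicateType") != SLSA_PROVENANCE_V1:
        return False
    digest = hashlib.sha256(artifact).hexdigest()
    return any(s.get("digest", {}).get("sha256") == digest for s in statement.get("subject", []))


if __name__ == "__main__":
    agent = b"pretend this is the kata-agent binary"
    stmt = make_statement("agent", agent)
    print(statement_covers(stmt, agent))
    print(statement_covers(stmt, agent + b"\x00"))  # any change alters the digest
```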

Following is the current state of provenance generation of the CoCo projects:

| Component | Provenance Generation | Remarks |
|---|---|---|
| kata-containers | GHA workflow | |
| guest-components | GHA workflow | |
| cloud-api-adaptor | GHA workflow | Provenance is for the generated qcow2 image |
| enclave-cc | TBD | |
| coco operator | TBD | |
| trustee | TBD | |
| trustee-operator | TBD | |

Artifacts in OCI registries

Formerly used exclusively to host layers of container images, OCI registries today serve a multitude of use cases, such as Helm charts or attestation data. A registry is a content-addressable store, which means that each piece of content is addressed by a digest of that content.

$ DIGEST="84ec2a70279219a45d327ec1f2f112d019bc9dcdd0e19f1ba7689b646c2de0c2"

$ oras manifest fetch "quay.io/curl/curl@sha256:${DIGEST}" | sha256sum

84ec2a70279219a45d327ec1f2f112d019bc9dcdd0e19f1ba7689b646c2de0c2 -
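The `oras manifest fetch ... | sha256sum` check above is the essence of content addressing: the name is derived from the bytes. A minimal Python equivalent, assuming you already hold the raw manifest bytes, is shown below; the example manifest is a made-up placeholder. The digest must be computed over the exact bytes the registry served, since re-serializing the JSON (different whitespace or key order) produces a different digest.

```python
import hashlib
import json


def oci_digest(blob: bytes) -> str:
    """Compute an OCI-style digest string ('sha256:<hex>') for raw content."""
    return "sha256:" + hashlib.sha256(blob).hexdigest()


def matches_digest(blob: bytes, expected: str) -> bool:
    """Content-addressed check: the content is valid only if it hashes to its name."""
    return oci_digest(blob) == expected


if __name__ == "__main__":
    manifest = b'{"schemaVersion":2,"mediaType":"application/vnd.oci.image.manifest.v1+json"}'
    digest = oci_digest(manifest)
    print(matches_digest(manifest, digest))
    # Same JSON content, different bytes: the digest no longer matches
    reserialized = json.dumps(json.loads(manifest), indent=2).encode()
    print(matches_digest(reserialized, digest))
```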

We also use OCI registries to distribute and cache artifacts in the CoCo project.

By convention, upstream dependencies are specified in a versions.yaml file like this:

oci:
  ...
  kata-containers:
    registry: ghcr.io/kata-containers/cached-artefacts
    reference: 3.13.0
  guest-components:
    registry: ghcr.io/confidential-containers/guest-components
    reference: 3df6c412059f29127715c3fdbac9fa41f56cfce4

Note that the reference in this case is a tag: sometimes a version, and sometimes the digest of a given git commit, but not the digest of the OCI artefact. What do we express with this specification, and what do we want to verify?

We might want to resolve a tag to a git digest first; for example, tag 3.13.0 resolves to the digest 2777b13db748f9ba785c7d2be4fcb6ac9c9af265. Knowing the git digest, we now want to verify that official repository runners built the artefact from the main branch, using the source code and build workflows at that digest.

This is what the SLSA attestation that we created during the artefact’s build can substantiate.
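Because the `reference` field in versions.yaml can hold different kinds of values, a consumer has to decide how to treat each one before verifying anything. A minimal Python sketch of that decision follows; the helper name and the three categories are assumptions made for illustration, not part of the CoCo tooling.

```python
import re

GIT_COMMIT = re.compile(r"[0-9a-f]{40}")         # full SHA-1 git commit id
OCI_DIGEST = re.compile(r"sha256:[0-9a-f]{64}")  # digest of an OCI artifact


def classify_reference(ref: str) -> str:
    """Classify a versions.yaml reference as a git commit, an OCI digest, or a tag."""
    if GIT_COMMIT.fullmatch(ref):
        return "git-commit"
    if OCI_DIGEST.fullmatch(ref):
        return "oci-digest"
    # Anything else (e.g. 3.13.0) is a movable tag that must be resolved first
    return "tag"


if __name__ == "__main__":
    for ref in ("3.13.0", "3df6c412059f29127715c3fdbac9fa41f56cfce4"):
        print(ref, classify_reference(ref))
```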

It wouldn’t make sense to attest an artifact referenced by an OCI tag (tags are not immutable, and one can move a tag to point to something else); instead, the attestations are tied to an OCI artifact referenced by its digest and are conveniently stored alongside it in the same repository. We can find them manually by searching for referrers of our OCI artifact.

$ GIT_DGST="2777b13db748f9ba785c7d2be4fcb6ac9c9af265"

$ oras resolve "ghcr.io/kata-containers/cached-artefacts/agent:${GIT_DGST}-x86_64"

sha256:c127db93af2fcefddebbe98013e359a7c30b9130317a96aab50093af0dbe8464

$ OCI_DGST=sha256:c127db93af2fcefddebbe98013e359a7c30b9130317a96aab50093af0dbe8464

$ oras discover "ghcr.io/kata-containers/cached-artefacts/agent@${OCI_DGST}"

...

└── application/vnd.dev.sigstore.bundle.v0.3+json

└── sha256:f93cc5a59ec0b9d23482831e1ce52bb3298c168da5c2d333a33118280f1f6d5b

Provenance Verification

As mentioned previously, the GitHub CLI provides a command to verify the provenance.

The following snippet from the cloud-api-adaptor project’s Makefile shows an example usage:

define pull_agent_artifact
$(eval $(call generate_tag,tag,$(KATA_REF),$(ARCH)))
$(eval OCI_IMAGE := $(KATA_REGISTRY)/agent)
$(eval OCI_DIGEST := $(shell oras resolve $(OCI_IMAGE):${tag}))
$(eval OCI_REF := $(OCI_IMAGE)@$(OCI_DIGEST))
$(if $(filter yes,$(VERIFY_PROVENANCE)),$(ROOT_DIR)hack/verify-provenance.sh \
    -a $(OCI_REF) \
    -r $(KATA_REPO) \
    -d $(KATA_COMMIT))
oras pull $(OCI_REF)
endef

The verify-provenance.sh script is a utility wrapper around the gh attestation verify command. It encodes the requirements for a valid build that we described above.

The source is available here.
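The requirements described earlier (built by the expected repository, from the main branch, at the expected commit) can also be expressed as checks on a decoded SLSA provenance predicate. The Python sketch below assumes the field layout of GitHub’s workflow build type (`buildDefinition.externalParameters.workflow` and `resolvedDependencies[].digest.gitCommit`); check it against a real attestation before relying on it. It is meant to run after signature verification, not instead of it, and it is not a transcription of verify-provenance.sh.

```python
def meets_build_requirements(predicate: dict, repository: str, commit: str,
                             ref: str = "refs/heads/main") -> bool:
    """Check repository, branch and source commit recorded in an SLSA v1 provenance predicate."""
    build = predicate.get("buildDefinition", {})
    workflow = build.get("externalParameters", {}).get("workflow", {})
    dependencies = build.get("resolvedDependencies", [])
    return (
        workflow.get("repository") == repository
        and workflow.get("ref") == ref
        and any(dep.get("digest", {}).get("gitCommit") == commit for dep in dependencies)
    )


if __name__ == "__main__":
    # Hypothetical, heavily trimmed predicate for illustration
    predicate = {
        "buildDefinition": {
            "externalParameters": {
                "workflow": {
                    "repository": "https://github.com/kata-containers/kata-containers",
                    "ref": "refs/heads/main",
                }
            },
            "resolvedDependencies": [
                {"digest": {"gitCommit": "2777b13db748f9ba785c7d2be4fcb6ac9c9af265"}}
            ],
        }
    }
    print(meets_build_requirements(
        predicate,
        "https://github.com/kata-containers/kata-containers",
        "2777b13db748f9ba785c7d2be4fcb6ac9c9af265",
    ))
```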

Next Steps

To further improve the security posture of the CoCo project, we aim to integrate provenance generation and verification processes for other sub-projects, such as the CoCo operator and trustee.

We welcome your feedback and contributions—join our community and be part of the conversation!

Confidential Containers without confidential hardware

Note: This blog post was originally published here, based on the very first versions of Confidential Containers (CoCo), which at that time was just a Proof-of-Concept (PoC) project. Since then the project has evolved a lot: we merged the work into the Kata Containers mainline, dropped a custom code branch of containerd, introduced or improved many features, started new sub-projects, and the community finally reached maturity. Thus, this new version of the blog post revisits the installation and use of CoCo on workstations without confidential hardware, taking into consideration the changes since the early versions of the project.

Introduction

The Confidential Containers (CoCo) project aims to implement a cloud-native solution for confidential computing using the most advanced trusted execution environments (TEE) technologies available from hardware vendors like AMD, IBM and Intel.

The community recognizes that not every developer has access to TEE-capable machines and we don’t want this to be a blocker for contributions. So version 0.10.0 and later come with a custom runtime that lets developers play with CoCo on either a simple virtual or bare-metal machine.

In this tutorial you will learn:

- How to install CoCo and create a simple confidential pod on Kubernetes

- The main features that keep your pod confidential

Since we will be using a custom runtime environment without confidential hardware, we will not be able to perform the real attestation implemented by CoCo. Instead we will use a sample verifier, so the pod created won’t be strictly “confidential”.

A brief introduction to Confidential Containers

Confidential Containers is a sandbox project of the Cloud Native Computing Foundation (CNCF) that enables cloud-native confidential computing by taking advantage of a variety of hardware platforms and technologies, such as Intel SGX, Intel TDX, AMD SEV-SNP and IBM Secure Execution for Linux. The project aims to integrate hardware and software technologies to deliver a seamless experience to users running applications on Kubernetes.

For a high level overview of the CoCo project, please see: What is the Confidential Containers project?

What is required for this tutorial?

As mentioned above, you don’t need TEE-capable hardware for this tutorial. You will only be required to have:

- Ubuntu 22.04 virtual or bare-metal machine with a minimum of 8GB RAM and 4 vCPUs

- Kubernetes 1.30.1 or above

It is beyond the scope of this blog to tell you how to install Kubernetes, but there are some details that should be taken into consideration:

- CoCo v0.10.0 was tested on Continuous Integration (CI) with Kubernetes installed via kubeadm (see here how to create a cluster with that tool). Also, some community members reported that it works fine in Kubernetes over kind.

- Containerd is supported, while CRI-O still lacks some features (e.g. encrypted images). On CI, most of the tests were executed on Kubernetes configured with Containerd, so that is the container runtime chosen for this blog post.

- Ensure that your cluster nodes are not tainted with NoSchedule, otherwise the installation will fail. This is very common on single-node Kubernetes installed with kubeadm.

- Ensure that the worker nodes where CoCo will be installed have SELinux disabled, as this is a current limitation (refer to the v0.10.0 limitations for further details).
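A quick way to spot offending nodes is to look at `spec.taints` in the JSON output of `kubectl get nodes -o json`. The Python sketch below lists the nodes carrying a `NoSchedule` taint; the helper name and the trimmed sample are illustrative assumptions.

```python
import json


def nodes_with_noschedule(nodes_json: str) -> list:
    """Return names of nodes that have at least one taint with effect NoSchedule."""
    data = json.loads(nodes_json)
    tainted = []
    for node in data.get("items", []):
        taints = node.get("spec", {}).get("taints") or []
        if any(taint.get("effect") == "NoSchedule" for taint in taints):
            tainted.append(node["metadata"]["name"])
    return tainted


if __name__ == "__main__":
    # Trimmed sample resembling a single-node kubeadm cluster
    sample = json.dumps({"items": [{
        "metadata": {"name": "coco-demo"},
        "spec": {"taints": [{"key": "node-role.kubernetes.io/control-plane",
                             "effect": "NoSchedule"}]},
    }]})
    print(nodes_with_noschedule(sample))
```

On such a node, the kubeadm control-plane taint can be removed with `kubectl taint nodes coco-demo node-role.kubernetes.io/control-plane:NoSchedule-`.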

How to install Confidential Containers

The CoCo runtime is bundled in a Kubernetes operator that should be deployed on your cluster.

In this section you will learn how to get the CoCo operator installed.

First, you should have the node.kubernetes.io/worker= label on all the cluster nodes that you want the runtime installed on. This is how the cluster admin instructs the operator controller about which nodes, in a multi-node cluster, need the runtime. Use the command kubectl label node NODE_NAME "node.kubernetes.io/worker=" as in the listing below to add the label:

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

coco-demo Ready control-plane 87s v1.30.1

$ kubectl label node "coco-demo" "node.kubernetes.io/worker="

node/coco-demo labeled

Once the target worker nodes are properly labeled, the next step is to install the operator controller. However, you should first ensure that SELinux is disabled or in permissive mode, because the operator controller will attempt to restart services on your system and SELinux may deny that. The following sequence of commands sets SELinux to permissive and installs the operator controller:

$ sudo setenforce 0

$ kubectl apply -k github.com/confidential-containers/operator/config/release?ref=v0.10.0

This will create a series of resources in the confidential-containers-system namespace. In particular, it creates a deployment with pods that all need to be running before you continue the installation, as shown below:

$ kubectl get pods -n confidential-containers-system

NAME READY STATUS RESTARTS AGE

cc-operator-controller-manager-557b5cbdc5-q7wk7 2/2 Running 0 2m42s

The operator controller is capable of managing the installation of different CoCo runtimes through Kubernetes custom resources. In the v0.10.0 release, the following runtimes are supported:

- ccruntime - the default, Kata Containers based implementation of CoCo. This is the runtime that we will use here.

- enclave-cc - provides process-based isolation using Intel SGX

Now it is time to install the ccruntime runtime. You should run the following commands and wait a few minutes while it downloads and installs Kata Containers and configures your node for CoCo:

$ kubectl apply -k github.com/confidential-containers/operator/config/samples/ccruntime/default?ref=v0.10.0

ccruntime.confidentialcontainers.org/ccruntime-sample created

$ kubectl get pods -n confidential-containers-system --watch

NAME READY STATUS RESTARTS AGE

cc-operator-controller-manager-557b5cbdc5-q7wk7 2/2 Running 0 26m

cc-operator-daemon-install-q27qz 1/1 Running 0 8m10s

cc-operator-pre-install-daemon-d55v2 1/1 Running 0 8m35s

You will notice that several Kubernetes runtime classes get installed, as shown in the listing below. Each class defines a container runtime configuration; for example, kata-qemu-tdx should be used to launch QEMU/KVM for Intel TDX hardware (similarly, kata-qemu-snp for AMD SEV-SNP). To create a confidential pod in a non-TEE environment, we will use the kata-qemu-coco-dev runtime class.

$ kubectl get runtimeclasses

NAME HANDLER AGE

kata kata-qemu 26m

kata-clh kata-clh 26m

kata-qemu kata-qemu 26m

kata-qemu-coco-dev kata-qemu-coco-dev 26m

kata-qemu-sev kata-qemu-sev 26m

kata-qemu-snp kata-qemu-snp 26m

kata-qemu-tdx kata-qemu-tdx 26m

Creating your first confidential pod

In this section we will create the bare-minimum confidential pod using a regular busybox image. Later on we will show how to use encrypted container images.

Create the coco-demo-01.yaml file with the following content:

---
apiVersion: v1
kind: Pod
metadata:
  name: coco-demo-01
  annotations:
    "io.containerd.cri.runtime-handler": "kata-qemu-coco-dev"
spec:
  runtimeClassName: kata-qemu-coco-dev
  containers:
    - name: busybox
      image: quay.io/prometheus/busybox:latest
      imagePullPolicy: Always
      command:
        - sleep
        - "infinity"
  restartPolicy: Never
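If you generate manifests from code, the same pod can be expressed as a plain data structure. This minimal Python sketch (the function name is hypothetical) builds the coco-demo-01 pod as a dict and prints it as JSON, which kubectl also accepts as a manifest format. Note that the runtime-handler annotation and runtimeClassName carry the same value, as in the YAML above.

```python
import json


def coco_demo_pod(name: str = "coco-demo-01",
                  runtime_class: str = "kata-qemu-coco-dev",
                  image: str = "quay.io/prometheus/busybox:latest") -> dict:
    """Build the coco-demo-01 pod manifest as a dict."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {
            "name": name,
            # Same value as spec.runtimeClassName, mirroring the YAML above.
            "annotations": {"io.containerd.cri.runtime-handler": runtime_class},
        },
        "spec": {
            "runtimeClassName": runtime_class,
            "containers": [{
                "name": "busybox",
                "image": image,
                "imagePullPolicy": "Always",
                "command": ["sleep", "infinity"],
            }],
            "restartPolicy": "Never",
        },
    }


if __name__ == "__main__":
    # Example: python3 make_pod.py | kubectl apply -f -
    print(json.dumps(coco_demo_pod(), indent=2))
```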

Then apply the coco-demo-01.yaml manifest and wait for the pod to reach the Running status, as shown below:

$ kubectl apply -f coco-demo-01.yaml

pod/coco-demo-01 created

$ kubectl get pods

NAME READY STATUS RESTARTS AGE

coco-demo-01 1/1 Running 0 24s
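Rather than re-running kubectl get pods until the status changes, you can let kubectl block until the pod reports Ready. A minimal Python wrapper around kubectl wait follows, assuming kubectl is on your PATH; the helper names and the 300-second default timeout are our own choices.

```python
import subprocess


def wait_ready_cmd(pod: str, timeout_s: int = 300, namespace: str = "default") -> list[str]:
    """Build a `kubectl wait` command that blocks until the pod is Ready."""
    return ["kubectl", "wait", "--for=condition=Ready", f"pod/{pod}",
            "-n", namespace, f"--timeout={timeout_s}s"]


def wait_ready(pod: str, timeout_s: int = 300, namespace: str = "default") -> None:
    # kubectl wait exits non-zero on timeout, so check=True raises
    # subprocess.CalledProcessError if the pod never becomes Ready.
    subprocess.run(wait_ready_cmd(pod, timeout_s, namespace), check=True)
```

A generous timeout is useful here because the guest VM has to boot and pull the image before the container can start.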

Congrats! Your first Confidential Containers pod has been created, and you didn’t need confidential hardware!

A view of what’s going on behind the scenes

In this section we’ll show you some concepts and details of the CoCo implementation that can be demonstrated with this simple coco-demo-01 pod. Later we will create more complex and interesting examples.

Containers inside a confidential virtual machine (CVM)

Our confidential containers implementation is built on Kata Containers, whose most notable feature is running the containers in a virtual machine (VM), so the created demo pod is naturally isolated from the host kernel.

Currently CoCo supports launching pods with QEMU only, even though Kata Containers supports other hypervisors. An instance of QEMU was launched to run coco-demo-01, as you can see below:

$ ps aux | grep /opt/kata/bin/qemu-system-x86_64

root 15892 0.8 3.6 2648004 295424 ? Sl 20:36 0:04 /opt/kata/bin/qemu-system-x86_64 -name sandbox-baabb31ff0c798a31bca7373f2abdbf2936375a5729a3599799c0a225f3b9612 -uuid e8a3fb26-eafa-4d6b-b74e-93d0314b6e35 -machine q35,accel=kvm,nvdimm=on -cpu host,pmu=off -qmp unix:fd=3,server=on,wait=off -m 2048M,slots=10,maxmem=8961M -device pci-bridge,bus=pcie.0,id=pci-bridge-0,chassis_nr=1,shpc=off,addr=2,io-reserve=4k,mem-reserve=1m,pref64-reserve=1m -device virtio-serial-pci,disable-modern=true,id=serial0 -device virtconsole,chardev=charconsole0,id=console0 -chardev socket,id=charconsole0,path=/run/vc/vm/baabb31ff0c798a31bca7373f2abdbf2936375a5729a3599799c0a225f3b9612/console.sock,server=on,wait=off -device nvdimm,id=nv0,memdev=mem0,unarmed=on -object memory-backend-file,id=mem0,mem-path=/opt/kata/share/kata-containers/kata-ubuntu-latest-confidential.image,size=268435456,readonly=on -device virtio-scsi-pci,id=scsi0,disable-modern=true -object rng-random,id=rng0,filename=/dev/urandom -device virtio-rng-pci,rng=rng0 -device vhost-vsock-pci,disable-modern=true,vhostfd=4,id=vsock-1515224306,guest-cid=1515224306 -netdev tap,id=network-0,vhost=on,vhostfds=5,fds=6 -device driver=virtio-net-pci,netdev=network-0,mac=6a:e6:eb:34:52:32,disable-modern=true,mq=on,vectors=4 -rtc base=utc,driftfix=slew,clock=host -global kvm-pit.lost_tick_policy=discard -vga none -no-user-config -nodefaults -nographic --no-reboot -object memory-backend-ram,id=dimm1,size=2048M -numa node,memdev=dimm1 -kernel /opt/kata/share/kata-containers/vmlinuz-6.7-136-confidential -append tsc=reliable no_timer_check rcupdate.rcu_expedited=1 i8042.direct=1 i8042.dumbkbd=1 i8042.nopnp=1 i8042.noaux=1 noreplace-smp reboot=k cryptomgr.notests net.ifnames=0 pci=lastbus=0 root=/dev/pmem0p1 rootflags=dax,data=ordered,errors=remount-ro ro rootfstype=ext4 console=hvc0 console=hvc1 quiet systemd.show_status=false panic=1 nr_cpus=4 selinux=0 systemd.unit=kata-containers.target systemd.mask=systemd-networkd.service 
systemd.mask=systemd-networkd.socket scsi_mod.scan=none -pidfile /run/vc/vm/baabb31ff0c798a31bca7373f2abdbf2936375a5729a3599799c0a225f3b9612/pid -smp 1,cores=1,threads=1,sockets=4,maxcpus=4

The launched kernel (/opt/kata/share/kata-containers/vmlinuz-6.7-136-confidential) and guest image (/opt/kata/share/kata-containers/kata-ubuntu-latest-confidential.image), as well as QEMU itself (/opt/kata/bin/qemu-system-x86_64), were all installed on the host by the CoCo operator as part of the ccruntime installation.
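The QEMU command line is long, but only a few arguments matter for this discussion. Here is a minimal Python sketch (the function name is hypothetical) that pulls the sandbox ID out of -name, the kernel path out of -kernel, and the guest image out of the memory-backend-file object. The sandbox ID is the same one that names the sandbox directory under /run/kata-containers/shared/sandboxes/ on the host.

```python
def qemu_boot_artifacts(cmdline: str) -> dict:
    """Extract sandbox ID, kernel and guest image paths from a QEMU command line.

    Assumes whitespace-separated arguments (as printed by `ps aux`) and
    paths without spaces.
    """
    args = cmdline.split()
    info = {}
    # Walk over (flag, value) pairs of consecutive arguments.
    for flag, value in zip(args, args[1:]):
        if flag == "-name":
            info["sandbox"] = value.removeprefix("sandbox-")
        elif flag == "-kernel":
            info["kernel"] = value
        elif flag == "-object" and value.startswith("memory-backend-file,"):
            # e.g. memory-backend-file,id=mem0,mem-path=/path/image,size=...
            opts = dict(kv.split("=", 1) for kv in value.split(",")[1:])
            info["image"] = opts.get("mem-path")
    return info
```

On the host, a more robust input than ps output is /proc/&lt;pid&gt;/cmdline, whose arguments are NUL-separated (replace the NUL bytes with spaces before calling the function).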

If you run uname -a inside coco-demo-01 and compare the result with the one obtained on the host, you will notice that the container runs on a different kernel, as shown below:

$ kubectl exec coco-demo-01 -- uname -a

Linux 6.7.0 #1 SMP Mon Sep 9 09:48:13 UTC 2024 x86_64 GNU/Linux

$ uname -a

Linux coco-demo 5.15.0-97-generic #107-Ubuntu SMP Wed Feb 7 13:26:48 UTC 2024 x86_64 x86_64 x86_64 GNU/Linux
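The same comparison can be scripted. This minimal Python sketch (function names are hypothetical) reads the kernel release inside the pod with kubectl exec and uname -r, and compares it with the release reported on the machine running the script, so it is meant to run on the Kubernetes node itself.

```python
import platform
import subprocess


def guest_kernel_release(pod: str) -> str:
    """Kernel release seen inside the pod, i.e. the guest VM kernel."""
    out = subprocess.run(["kubectl", "exec", pod, "--", "uname", "-r"],
                         capture_output=True, text=True, check=True).stdout
    return out.strip()


def runs_on_separate_kernel(guest_release: str, host_release: str) -> bool:
    # A regular (runc) container shares the host kernel, so the releases match.
    # This is a heuristic: a mismatch shows a separate kernel, while a match
    # alone would not prove that the kernel is shared.
    return guest_release.strip() != host_release.strip()
```

For example, on the node: `runs_on_separate_kernel(guest_kernel_release("coco-demo-01"), platform.release())`. Keep in mind that this relies on exec being allowed in the pod.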

On a platform with a supported TEE, you would also be able to check whether the VM has confidential features enabled, for example memory and register state encryption, as well as hardware-based measurement and attestation.

Inside the VM, there is an agent (Kata Agent) process which responds to requests from the Kata Containers runtime to manage the containers’ lifecycle. In the next sections, we explain how that agent cooperates with other elements of the architecture to increase the confidentiality of the workload.

The host cannot see the container image

To oversimplify: in a regular Kata Containers pod, the container image is pulled by the container runtime on the host and then mounted inside the VM. The CoCo implementation changes this behavior through a chain of delegations so that the image is pulled directly from within the guest VM. As a result, the host has no access to the image content (except for some metadata).

If the ctr command is available in your environment, you can check that only the manifest of quay.io/prometheus/busybox was cached in containerd’s storage, and that no rootfs directory exists in /run/kata-containers/shared/sandboxes/<pod id>, as shown below:

$ sudo ctr -n "k8s.io" image check name==quay.io/prometheus/busybox:latest

REF TYPE DIGEST STATUS SIZE UNPACKED

quay.io/prometheus/busybox:latest application/vnd.docker.distribution.manifest.list.v2+json sha256:dfa54ef35e438b9e71ac5549159074576b6382f95ce1a434088e05fd6b730bc4 incomplete (1/3) 1.0 KiB/1.2 MiB false

$ sudo find /run/kata-containers/shared/sandboxes/baabb31ff0c798a31bca7373f2abdbf2936375a5729a3599799c0a225f3b9612/

/run/kata-containers/shared/sandboxes/baabb31ff0c798a31bca7373f2abdbf2936375a5729a3599799c0a225f3b9612/

/run/kata-containers/shared/sandboxes/baabb31ff0c798a31bca7373f2abdbf2936375a5729a3599799c0a225f3b9612/mounts

/run/kata-containers/shared/sandboxes/baabb31ff0c798a31bca7373f2abdbf2936375a5729a3599799c0a225f3b9612/shared
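Both checks can be turned into simple assertions. The following minimal Python sketch (function names are hypothetical) reads the UNPACKED column from the ctr image check output and walks the sandbox's shared directory looking for any directory named rootfs. It assumes the column layout shown above, where UNPACKED is the last field of each row.

```python
import os


def image_unpacked_on_host(ctr_check_output: str, ref: str) -> bool:
    """Return the UNPACKED column of `ctr image check` for the given image ref."""
    for line in ctr_check_output.splitlines():
        fields = line.split()
        if fields and fields[0] == ref:
            return fields[-1] == "true"
    raise LookupError(f"{ref} not found in ctr output")


def sandbox_has_rootfs(sandbox_dir: str) -> bool:
    """True if a directory named 'rootfs' exists anywhere under sandbox_dir."""
    for _root, dirs, _files in os.walk(sandbox_dir):
        if "rootfs" in dirs:
            return True
    return False
```

For coco-demo-01, both functions are expected to return False: the image was never unpacked on the host, and no container root filesystem is shared from it.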

It is worth mentioning that not caching images on the host has a downside: images cannot be shared across pods, which hurts container bring-up performance. The CoCo community plans to address this with a better solution in upcoming releases.

Going towards confidentiality

In this section we will increase the complexity of the pod and the configuration of CoCo to showcase more features.

Adding Kata Containers agent policies

Bonus points if you noticed that, in the coco-demo-01 pod example, the host owner can execute arbitrary commands in the container (as we did with kubectl exec), potentially stealing sensitive data. This obviously goes against the confidentiality mantra of “never trust the host”. Fortunately, this operation and other default behaviors can be configured using the Kata Containers agent policy mechanism.

As an example, let’s block the ExecProcessRequest endpoint of the kata-agent to deny the execution of commands in the container. First, you need to base64-encode a Rego policy file, as shown below:

$ curl -s https://raw.githubusercontent.com/kata-containers/kata-containers/refs/heads/main/src/kata-opa/allow-all-except-exec-process.rego | base64 -w 0

IyBDb3B5cmlnaHQgKGMpIDIwMjMgTWljcm9zb2Z0IENvcnBvcmF0aW9uCiMKIyBTUERYLUxpY2Vuc2UtSWRlbnRpZmllcjogQXBhY2hlLTIuMAojCgpwYWNrYWdlIGFnZW50X3BvbGljeQoKZGVmYXVsdCBBZGRBUlBOZWlnaGJvcnNSZXF1ZXN0IDo9IHRydWUKZGVmYXVsdCBBZGRTd2FwUmVxdWVzdCA6PSB0cnVlCmRlZmF1bHQgQ2xvc2VTdGRpblJlcXVlc3QgOj0gdHJ1ZQpkZWZhdWx0IENvcHlGaWxlUmVxdWVzdCA6PSB0cnVlCmRlZmF1bHQgQ3JlYXRlQ29udGFpbmVyUmVxdWVzdCA6PSB0cnVlCmRlZmF1bHQgQ3JlYXRlU2FuZGJveFJlcXVlc3QgOj0gdHJ1ZQpkZWZhdWx0IERlc3Ryb3lTYW5kYm94UmVxdWVzdCA6PSB0cnVlCmRlZmF1bHQgR2V0TWV0cmljc1JlcXVlc3QgOj0gdHJ1ZQpkZWZhdWx0IEdldE9PTUV2ZW50UmVxdWVzdCA6PSB0cnVlCmRlZmF1bHQgR3Vlc3REZXRhaWxzUmVxdWVzdCA6PSB0cnVlCmRlZmF1bHQgTGlzdEludGVyZmFjZXNSZXF1ZXN0IDo9IHRydWUKZGVmYXVsdCBMaXN0Um91dGVzUmVxdWVzdCA6PSB0cnVlCmRlZmF1bHQgTWVtSG90cGx1Z0J5UHJvYmVSZXF1ZXN0IDo9IHRydWUKZGVmYXVsdCBPbmxpbmVDUFVNZW1SZXF1ZXN0IDo9IHRydWUKZGVmYXVsdCBQYXVzZUNvbnRhaW5lclJlcXVlc3QgOj0gdHJ1ZQpkZWZhdWx0IFB1bGxJbWFnZVJlcXVlc3QgOj0gdHJ1ZQpkZWZhdWx0IFJlYWRTdHJlYW1SZXF1ZXN0IDo9IHRydWUKZGVmYXVsdCBSZW1vdmVDb250YWluZXJSZXF1ZXN0IDo9IHRydWUKZGVmYXVsdCBSZW1vdmVTdGFsZVZpcnRpb2ZzU2hhcmVNb3VudHNSZXF1ZXN0IDo9IHRydWUKZGVmYXVsdCBSZXNlZWRSYW5kb21EZXZSZXF1ZXN0IDo9IHRydWUKZGVmYXVsdCBSZXN1bWVDb250YWluZXJSZXF1ZXN0IDo9IHRydWUKZGVmYXVsdCBTZXRHdWVzdERhdGVUaW1lUmVxdWVzdCA6PSB0cnVlCmRlZmF1bHQgU2V0UG9saWN5UmVxdWVzdCA6PSB0cnVlCmRlZmF1bHQgU2lnbmFsUHJvY2Vzc1JlcXVlc3QgOj0gdHJ1ZQpkZWZhdWx0IFN0YXJ0Q29udGFpbmVyUmVxdWVzdCA6PSB0cnVlCmRlZmF1bHQgU3RhcnRUcmFjaW5nUmVxdWVzdCA6PSB0cnVlCmRlZmF1bHQgU3RhdHNDb250YWluZXJSZXF1ZXN0IDo9IHRydWUKZGVmYXVsdCBTdG9wVHJhY2luZ1JlcXVlc3QgOj0gdHJ1ZQpkZWZhdWx0IFR0eVdpblJlc2l6ZVJlcXVlc3QgOj0gdHJ1ZQpkZWZhdWx0IFVwZGF0ZUNvbnRhaW5lclJlcXVlc3QgOj0gdHJ1ZQpkZWZhdWx0IFVwZGF0ZUVwaGVtZXJhbE1vdW50c1JlcXVlc3QgOj0gdHJ1ZQpkZWZhdWx0IFVwZGF0ZUludGVyZmFjZVJlcXVlc3QgOj0gdHJ1ZQpkZWZhdWx0IFVwZGF0ZVJvdXRlc1JlcXVlc3QgOj0gdHJ1ZQpkZWZhdWx0IFdhaXRQcm9jZXNzUmVxdWVzdCA6PSB0cnVlCmRlZmF1bHQgV3JpdGVTdHJlYW1SZXF1ZXN0IDo9IHRydWUKCmRlZmF1bHQgRXhlY1Byb2Nlc3NSZXF1ZXN0IDo9IGZhbHNlCg==
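The encoding step is plain single-line base64 over the policy file's bytes, so it can also be done in code. The following minimal Python sketch (function names are hypothetical) produces the same string as base64 -w 0 and adds a sanity check that the policy's default for ExecProcessRequest is false before it is placed in the io.katacontainers.config.agent.policy annotation. It is not a Rego parser; it only looks for that exact default rule.

```python
import base64

POLICY_ANNOTATION = "io.katacontainers.config.agent.policy"


def encode_policy(rego: str) -> str:
    """Same output as `base64 -w 0`: standard base64, on a single line."""
    return base64.b64encode(rego.encode("utf-8")).decode("ascii")


def denies_exec(rego: str) -> bool:
    """True if the policy contains `default ExecProcessRequest := false`."""
    return any(line.split() == ["default", "ExecProcessRequest", ":=", "false"]
               for line in rego.splitlines())
```

To reproduce the string above, read the downloaded allow-all-except-exec-process.rego file as text and pass its full contents, including the trailing newline, to encode_policy. Encoding only a subset of the rules would produce a different, and possibly overly restrictive, policy.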

Then pass the policy to the runtime via the io.katacontainers.config.agent.policy pod annotation. Create the coco-demo-02.yaml file with the following content:

---
apiVersion: v1
kind: Pod
metadata:
  name: coco-demo-02
  annotations:
    "io.containerd.cri.runtime-handler": "kata-qemu-coco-dev"