Confidential Containers is an open source project that brings confidential computing to Cloud Native environments, leveraging hardware technology to protect complex workloads. Confidential Containers is a CNCF sandbox project.


Documentation

- 1: Overview

- 2: Getting Started

- 2.1: Prerequisites

- 2.1.1: Hardware Requirements

- 2.1.1.1: CoCo without Hardware

- 2.1.1.2: Secure Execution Host Setup

- 2.1.1.3: SEV-SNP Host Setup

- 2.1.1.4: SGX Host Setup

- 2.1.1.5: TDX Host Setup

- 2.1.2: Cloud Hardware

- 2.1.3: Cluster Setup

- 2.2: Installation

- 2.3: Simple Workload

- 3: Architecture

- 3.1: Design Overview

- 3.2: Trust Model

- 3.2.1: Trust Model for Confidential Containers

- 3.2.2: Personas

- 4: Features

- 4.1: Get Attestation

- 4.2: Get Secret Resources

- 4.3: Authenticated Registries

- 4.4: Encrypted Images

- 4.5: Local Registries

- 4.6: Protected Storage

- 4.7: Sealed Secrets

- 4.8: Signed Images

- 5: Attestation

- 5.1: CoCo Setup

- 5.2: Installation

- 5.2.1: Trustee Operator

- 5.2.2: Trustee in Docker

- 5.3: KBS Client Tool

- 5.4: Resources

- 5.4.1: Resource Backends

- 5.4.2: Azure Key Vault Integration

- 5.5: Policies

- 5.6: Reference Values

- 6: Examples

- 6.1: AWS

- 6.2: Azure

- 6.3: Container Launch with SNP Memory Encryption

- 6.4: GCP

- 7: Use Cases

- 7.1: Confidential AI

- 7.2: Switchboard Oracles: Securing Web3 Data with Confidential Containers

- 7.3: Secure Supply Chain

- 8: Troubleshooting

- 9: Contributing

1 - Overview

What is the Confidential Containers project?

Confidential Containers encapsulates pods inside of confidential virtual machines, allowing Cloud Native workloads to leverage confidential computing hardware with minimal modification.

Confidential Containers extends the guarantees of confidential computing to complex workloads. With Confidential Containers, sensitive workloads can be run on untrusted hosts and be protected from compromised or malicious users, software, and administrators.

Confidential Containers provides an end-to-end framework for deploying workloads, attesting them, and provisioning secrets.

What hardware does Confidential Containers support?

On bare metal, Confidential Containers supports the following platforms:

| Platform | Supports Attestation | Uses Kata |

|---|---|---|

| Intel TDX | Yes | Yes |

| Intel SGX | Yes | No |

| AMD SEV-SNP | Yes | Yes |

| AMD SEV(-ES) | No | Yes |

| IBM Secure Execution | Yes | Yes |

Confidential Containers can also be deployed in a cloud environment using the cloud-api-adaptor. The following platforms are supported.

| Platform | Cloud | Notes |

|---|---|---|

| SNP | Azure | |

| TDX | Azure | |

| Secure Execution | IBM | |

| None | AWS | Under development |

| SNP | GCP | |

| TDX | GCP | Under development |

| None | LibVirt | For local testing |

Confidential Containers provides an attestation and key-management engine called Trustee, which can attest the following platforms:

| Platform |

|---|

| AMD SEV-SNP |

| Intel TDX |

| Intel SGX |

| AMD SEV-SNP with Azure vTPM |

| Intel TDX with Azure vTPM |

| IBM Secure Execution |

| ARM CCA |

| Hygon CSV |

Trustee can be used with Confidential Containers or to attest standalone confidential guests.

See the Attestation section for more information.

2 - Getting Started

This section describes the hardware and software prerequisites, how to install Confidential Containers with an operator, how to verify the installation, and how to run a pod with Confidential Containers.

2.1 - Prerequisites

This section describes the hardware and software prerequisites for installing Confidential Containers.

2.1.1 - Hardware Requirements

Confidential Computing is a hardware technology. Confidential Containers supports multiple hardware platforms and can leverage cloud hardware. If you do not have bare metal hardware and will deploy Confidential Containers with a cloud integration, continue to the cloud section.

You can also run Confidential Containers without hardware support for testing or development.

The Confidential Containers operator, which is described in the following section, does not set up the host kernel, firmware, or system configuration. Before installing Confidential Containers on a bare metal system, make sure that your node can start confidential VMs.

This section describes the configuration that is required on the host.

Regardless of your platform, it is recommended to have at least 8 GB of RAM and 4 cores on your worker node.

2.1.1.1 - CoCo without Hardware

For testing or development, Confidential Containers can be deployed without any hardware support.

This is referred to as a coco-dev or non-TEE deployment.

A coco-dev deployment functions the same way as Confidential Containers with an enclave, but a non-confidential VM is used instead of a confidential VM.

This does not provide any security guarantees, but it can be used for testing.

No additional host configuration is required as long as the host supports virtualization.

2.1.1.2 - Secure Execution Host Setup

Platform Setup

This document outlines the steps to configure a host machine to support IBM Secure Execution on IBM Z & LinuxONE platforms. This capability enables enhanced security for workloads by taking advantage of protected virtualization. Ensure the host meets the necessary hardware and software requirements before proceeding.

Hardware Requirements

Supported hardware includes these systems:

- IBM z15 or newer models

- IBM LinuxONE III or newer models

Software Requirements

Additionally, the system must meet specific CPU and kernel configuration requirements. Follow the steps below to verify and enable the Secure Execution capability.

- Verify Protected Virtualization Support in the Kernel

  Run the following command to ensure the kernel supports protected virtualization:

  cat /sys/firmware/uv/prot_virt_host

  A value of 1 indicates support.

- Check Ultravisor Memory Reservation

  Confirm that the ultravisor has reserved memory during the current boot:

  sudo dmesg | grep -i ultravisor

  Example output:

  [ 0.063630] prot_virt.f9efb6: Reserving 98MB as ultravisor base storage

- Validate the Secure Execution Facility Bit

  Ensure the required facility bit (158) is present:

  cat /proc/cpuinfo | grep 158

  The facilities field should include 158.
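The first and third checks above can also be scripted. The following is a minimal sketch, not part of any official tooling: the function names are hypothetical, and it assumes the `facilities` line of `/proc/cpuinfo` lists facility bits as space-separated numbers. Matching whole tokens avoids the false positives that a plain `grep 158` can produce (for example, a match on `1158`).

```python
from pathlib import Path


def prot_virt_host_enabled(value: str) -> bool:
    """Return True if the content of /sys/firmware/uv/prot_virt_host is 1."""
    return value.strip() == "1"


def has_facility(cpuinfo: str, bit: int = 158) -> bool:
    """Return True if the facilities line of /proc/cpuinfo lists the given bit."""
    for line in cpuinfo.splitlines():
        if line.startswith("facilities"):
            _, _, values = line.partition(":")
            # Compare whole tokens so that 158 does not match 1158 or 1580.
            return str(bit) in values.split()
    return False


if __name__ == "__main__":
    uv = Path("/sys/firmware/uv/prot_virt_host")
    cpuinfo = Path("/proc/cpuinfo")
    # On hosts without these files the checks simply report False.
    print("prot_virt_host:", uv.exists() and prot_virt_host_enabled(uv.read_text()))
    print("facility 158:", cpuinfo.exists() and has_facility(cpuinfo.read_text()))
```

The ultravisor memory check is left out because reading the kernel log usually requires elevated privileges.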

If any required configuration is missing, contact your cloud provider to enable the Secure Execution capability for a machine. Alternatively, if you have administrative privileges and the facility bit (158) is set, you can enable it by modifying kernel parameters and rebooting the system:

- Modify Kernel Parameters

  Update the kernel configuration to include the prot_virt=1 parameter:

  sudo sed -i 's/^\(parameters.*\)/\1 prot_virt=1/g' /etc/zipl.conf

- Update the Bootloader and Reboot the System

  Apply the changes to the bootloader and reboot the system:

  sudo zipl -V

  sudo systemctl reboot

- Repeat the Verification Steps

  After rebooting, repeat the verification steps above to ensure Secure Execution is properly enabled.
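Running the `sed` command from the first step twice appends `prot_virt=1` twice. As an illustration only, the sketch below shows the same edit as an idempotent text transformation. The function name and the sample configuration are hypothetical, and like the `sed` expression it assumes that each `parameters` line can simply be extended at the end (which would not hold if the value is wrapped in quotes).

```python
import re


def add_prot_virt(zipl_conf: str) -> str:
    """Append prot_virt=1 to each parameters line that does not already set it."""

    def patch(match: re.Match) -> str:
        line = match.group(0)
        if re.search(r"\bprot_virt=", line):
            return line  # already configured, leave the line untouched
        return line + " prot_virt=1"

    # MULTILINE makes ^ and $ match at every line, mirroring sed's per-line behavior.
    return re.sub(r"^parameters.*$", patch, zipl_conf, flags=re.MULTILINE)


if __name__ == "__main__":
    sample = "[linux]\nparameters = root=/dev/dasda1\n"  # made-up example content
    print(add_prot_virt(sample), end="")
```

Printing the result instead of writing it back makes it possible to review the change before touching `/etc/zipl.conf`.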

Additional Notes

- The steps to enable Secure Execution might vary depending on the Linux distribution. Consult your distribution’s documentation if necessary.

- For more detailed information about IBM Secure Execution for Linux, see the official documentation at IBM Secure Execution for Linux.

2.1.1.3 - SEV-SNP Host Setup

Platform Setup

The host BIOS and kernel must be capable of supporting AMD SEV-SNP and the host must be configured accordingly.

The latest SEV Firmware version is available on AMD’s SEV Developer Webpage. It can also be updated via a platform OEM BIOS update.

The host kernel must be upstream version 6.11 or later.
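As a rough aid, the version requirement can be checked programmatically. This is a minimal sketch with a hypothetical function name; it only compares the major and minor numbers of a release string such as the one printed by `uname -r`, so a distribution kernel with backported patches could report an older version and still work.

```python
import re


def kernel_at_least(release: str, minimum: tuple = (6, 11)) -> bool:
    """Return True if a kernel release string is at least the given (major, minor)."""
    match = re.match(r"(\d+)\.(\d+)", release)
    if match is None:
        raise ValueError(f"unrecognized kernel release: {release!r}")
    return (int(match.group(1)), int(match.group(2))) >= minimum
```

On the host itself, the function could be called as `kernel_at_least(platform.release())` after importing the standard `platform` module.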

To build only the upstream-compatible host kernel, use the Confidential Containers fork of AMDESE AMDSEV. The host kernel can be built and installed on its own by running the following command:

./build.sh kernel host --install

Alternatively, sev-utils can be used to install the required host kernel, but it also builds AMD-compatible guest kernel, OVMF, and QEMU components, which is unnecessary because these components are already packaged with Kata. The additional components can be used with the script utility to test launching and attesting a base QEMU SNP guest.

2.1.1.4 - SGX Host Setup

TODO

2.1.1.5 - TDX Host Setup

Platform Setup

Additional Notes

- For more detailed information about Intel TDX, see the official documentation.

2.1.2 - Cloud Hardware

Note

If you are using bare metal confidential hardware, you can skip this section.

Confidential Containers can be deployed via confidential computing cloud offerings. The main method of doing this is to use the cloud-api-adaptor, also known as “peer pods.”

Some clouds also support starting confidential VMs inside of non-confidential VMs. With Confidential Containers these offerings can be used as if they were bare-metal.

2.1.3 - Cluster Setup

Confidential Containers requires Kubernetes. A cluster must be installed before running the operator. Many different clusters can be used but they should meet the following requirements.

- The minimum Kubernetes version is 1.24.

- Cluster must use containerd or cri-o.

- At least one node has the label node.kubernetes.io/worker.

- SELinux is not enabled.
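Most of these requirements can be checked against the JSON printed by `kubectl get nodes -o json`. The sketch below is illustrative and its names are hypothetical. It uses the kubelet version reported by each node as a stand-in for the cluster version, and it does not check SELinux because that setting is not part of the node object.

```python
import re

MIN_VERSION = (1, 24)
SUPPORTED_RUNTIMES = ("containerd", "cri-o")
WORKER_LABEL = "node.kubernetes.io/worker"


def cluster_problems(node_list: dict) -> list:
    """Return human-readable problems found in `kubectl get nodes -o json` output."""
    problems = []
    nodes = node_list.get("items", [])
    for node in nodes:
        name = node["metadata"]["name"]
        info = node["status"]["nodeInfo"]
        # kubeletVersion looks like "v1.29.3".
        match = re.match(r"v?(\d+)\.(\d+)", info["kubeletVersion"])
        if match is None or (int(match.group(1)), int(match.group(2))) < MIN_VERSION:
            problems.append(f"{name}: Kubernetes {info['kubeletVersion']} is older than 1.24")
        # containerRuntimeVersion looks like "containerd://1.7.2".
        runtime = info["containerRuntimeVersion"].split("://")[0]
        if runtime not in SUPPORTED_RUNTIMES:
            problems.append(f"{name}: unsupported container runtime {runtime}")
    if not any(WORKER_LABEL in node["metadata"].get("labels", {}) for node in nodes):
        problems.append(f"no node has the label {WORKER_LABEL}")
    return problems
```

An empty list means that none of the checked requirements failed.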

If you use Minikube or Kind to set up your cluster, you will only be able to use runtime classes based on Cloud Hypervisor due to an issue with QEMU.

2.2 - Installation

Note

Make sure you have completed the prerequisites before installing Confidential Containers.

Deploy the operator

Deploy the operator by running the following command, where <RELEASE_VERSION> needs to be substituted with the desired release tag.

kubectl apply -k github.com/confidential-containers/operator/config/release?ref=<RELEASE_VERSION>

For example, to deploy the v0.10.0 release, run:

kubectl apply -k github.com/confidential-containers/operator/config/release?ref=v0.10.0

Wait until each pod has the STATUS of Running.

kubectl get pods -n confidential-containers-system --watch

Create the custom resource

Creating a custom resource installs the required CC runtime pieces into the cluster node and creates the runtime classes. Apply only the sample below that matches your deployment (default, s390x, or enclave-cc).

kubectl apply -k github.com/confidential-containers/operator/config/samples/ccruntime/default?ref=<RELEASE_VERSION>

kubectl apply -k github.com/confidential-containers/operator/config/samples/ccruntime/s390x?ref=<RELEASE_VERSION>

kubectl apply -k github.com/confidential-containers/operator/config/samples/enclave-cc/hw?ref=<RELEASE_VERSION>

Note

If using enclave-cc with SGX, please refer to this guide for more information on setting the custom resource.

Wait until each pod has the STATUS of Running.

kubectl get pods -n confidential-containers-system --watch
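Instead of watching the output by eye, the same check can be automated on the JSON form of the pod list (`kubectl get pods -n confidential-containers-system -o json`). This is a minimal sketch with a hypothetical function name. It inspects the pod `phase` field, which approximates but is not identical to the STATUS column printed by kubectl.

```python
def pods_not_running(pod_list: dict) -> list:
    """Return the names of pods whose phase is not Running."""
    return [
        pod["metadata"]["name"]
        for pod in pod_list.get("items", [])
        if pod.get("status", {}).get("phase") != "Running"
    ]
```

An empty result indicates that every listed pod has reached the Running phase.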

Verify Installation

See if the expected runtime classes were created.

kubectl get runtimeclass

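The command should return output similar to one of the following, depending on the custom resource that was created: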

NAME HANDLER AGE

kata kata-qemu 8d

kata-clh kata-clh 8d

kata-qemu kata-qemu 8d

kata-qemu-coco-dev kata-qemu-coco-dev 8d

kata-qemu-sev kata-qemu-sev 8d

kata-qemu-snp kata-qemu-snp 8d

kata-qemu-tdx kata-qemu-tdx 8d

NAME HANDLER AGE

kata kata-qemu 60s

kata-qemu kata-qemu 61s

kata-qemu-se kata-qemu-se 61s

NAME HANDLER AGE

enclave-cc enclave-cc 9m55s

Runtime Classes

CoCo supports many different runtime classes. Different deployment types install different sets of runtime classes. The operator may install some runtime classes that are not valid for your system. For example, if you run the operator on a TDX machine, you might have TDX and SEV runtime classes. Use the runtime classes that match your hardware.

| Name | Type | Description |
|---|---|---|
| kata | x86 | Alias of the default runtime handler (usually the same as kata-qemu) |
| kata-clh | x86 | Kata Containers (non-confidential) using Cloud Hypervisor |
| kata-qemu | x86 | Kata Containers (non-confidential) using QEMU |
| kata-qemu-coco-dev | x86 | CoCo without an enclave (for testing only) |
| kata-qemu-sev | x86 | CoCo with QEMU for AMD SEV HW |
| kata-qemu-snp | x86 | CoCo with QEMU for AMD SNP HW |
| kata-qemu-tdx | x86 | CoCo with QEMU for Intel TDX HW |
| kata-qemu-se | s390x | CoCo with QEMU for Secure Execution |
| enclave-cc | SGX | CoCo with enclave-cc (process-based isolation without Kata) |
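Because the operator may install runtime classes that do not match the host, it can help to make the choice explicit. The sketch below encodes the confidential rows of the table as a lookup and verifies that the chosen class is actually installed. The platform keys (`"tdx"`, `"snp"`, and so on) and the function name are hypothetical labels chosen for this example.

```python
# Runtime class names are taken from the table above; the keys are illustrative.
RUNTIME_CLASS_BY_PLATFORM = {
    "none": "kata-qemu-coco-dev",  # no hardware support, testing only
    "sev": "kata-qemu-sev",
    "snp": "kata-qemu-snp",
    "tdx": "kata-qemu-tdx",
    "se": "kata-qemu-se",
    "sgx": "enclave-cc",
}


def pick_runtime_class(platform: str, installed: set) -> str:
    """Return the runtime class for a platform, failing if it is not installed."""
    name = RUNTIME_CLASS_BY_PLATFORM[platform]
    if name not in installed:
        raise LookupError(f"runtime class {name} is not installed")
    return name
```

The `installed` set would typically be built from the NAME column of `kubectl get runtimeclass`.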

2.3 - Simple Workload

Creating a sample Confidential Containers workload

Once you’ve used the operator to install Confidential Containers, you can run a pod with CoCo by simply adding a runtime class.

First, we will use the kata-qemu-coco-dev runtime class which uses CoCo without hardware support.

Initially we will try this with an unencrypted container image.

In this example, we use the bitnami/nginx image, as described in the following YAML:

apiVersion: v1
kind: Pod
metadata:
  labels:
    run: nginx
  name: nginx
  annotations:
    io.containerd.cri.runtime-handler: kata-qemu-coco-dev
spec:
  containers:
  - image: bitnami/nginx:1.22.0
    name: nginx
  dnsPolicy: ClusterFirst
  runtimeClassName: kata-qemu-coco-dev

For the most basic workloads, setting the runtimeClassName and runtime-handler annotation is usually the only requirement for the pod YAML.
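To make the two CoCo-specific fields stand out, the following sketch builds the same pod manifest programmatically. The helper name `coco_pod` is hypothetical. The manifest is emitted as JSON, which `kubectl apply -f` accepts in the same way as YAML.

```python
import json


def coco_pod(name: str, image: str, runtime_class: str) -> dict:
    """Build a minimal pod manifest that runs with the given CoCo runtime class."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {
            "name": name,
            "labels": {"run": name},
            # The annotation and runtimeClassName both name the runtime class.
            "annotations": {"io.containerd.cri.runtime-handler": runtime_class},
        },
        "spec": {
            "runtimeClassName": runtime_class,
            "dnsPolicy": "ClusterFirst",
            "containers": [{"name": name, "image": image}],
        },
    }


if __name__ == "__main__":
    pod = coco_pod("nginx", "bitnami/nginx:1.22.0", "kata-qemu-coco-dev")
    print(json.dumps(pod, indent=2))
```

Switching to a hardware-backed deployment then only requires passing a different runtime class name.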

Create a pod YAML file as previously described (we named it nginx.yaml).

Create the workload:

kubectl apply -f nginx.yaml

Output:

pod/nginx created

Ensure the pod was created successfully (in running state):

kubectl get pods

Output:

NAME READY STATUS RESTARTS AGE

nginx 1/1 Running 0 3m50s

3 - Architecture

3.1 - Design Overview

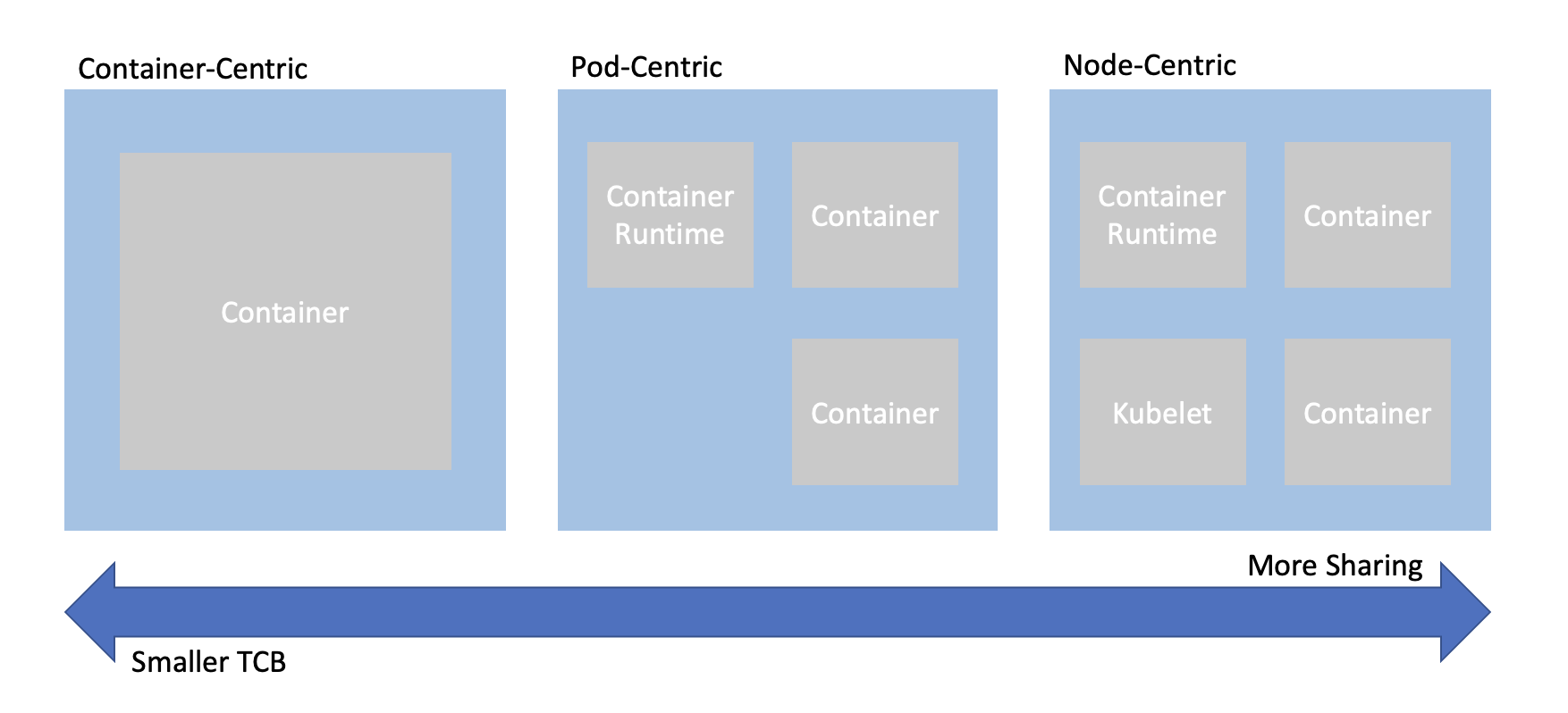

Confidential computing projects are largely defined by what is inside the enclave and what is not. For Confidential Containers, the enclave contains the workload pod and helper processes and daemons that facilitate the workload pod. Everything else, including the hypervisor, other pods, and the control plane, is outside of the enclave and untrusted. This division is carefully considered to balance TCB size and sharing.

When trying to combine confidential computing and cloud native computing, often the first thing that comes to mind is either to put just one container inside of an enclave, or to put an entire worker node inside of an enclave. These approaches are known as container-centric virtualization and node-centric virtualization, respectively. Confidential Containers opts for a compromise between these approaches which avoids some of their pitfalls. Specifically, node-centric approaches tend to have a large TCB that includes components such as the Kubelet. This makes the attack surface of the confidential guest significantly larger. It is also difficult to implement managed clusters in node-centric approaches because the workload runs in the same context as the rest of the cluster. On the other hand, container-centric approaches can support very little sharing of resources. Sharing is a loose term, but one example is two containers that need to share information over the network. In a container-centric approach this traffic would leave the enclave. Protecting the traffic would add overhead and complexity.

Confidential Containers takes a pod-centric approach which balances TCB size and sharing. While Confidential Containers does have some daemons and processes inside the enclave, the API of the guest is relatively small. Furthermore, the guest image is static and generic across workloads and even platforms, making it simpler to ensure security guarantees. At the same time, sharing between containers in the same pod is easy. For example, the pod network namespace doesn’t leave the enclave, so containers can communicate confidentially on it without additional overhead. These are just a few of the reasons why pod-centric virtualization seems to be the best way to provide confidential cloud native computing.

Kata Containers

Confidential Containers and Kata Containers are closely linked, but the relationship might not be obvious at first. Kata Containers is an existing open source project that encapsulates pods inside of VMs. Given the pod-centric design of Confidential Containers, this is a perfect match. But if Kata runs pods inside of VMs, why do we need the Confidential Containers project at all? There are crucial changes needed on top of Kata Containers to preserve confidentiality.

Image Pulling

When using Kata Containers, container images are pulled on the worker node with the help of a CRI runtime like containerd. The images are exposed to the guest via filesystem passthrough. This is not suitable for confidential workloads because the container images are exposed to the untrusted host.

With Confidential Containers, images are pulled and unpacked inside of the guest. This requires additional components such as image-rs to be part of the guest rootfs. These components are beyond the scope of traditional Kata deployments and live in the Confidential Containers guest components repository.

On the host, we use a snapshotter to pre-empt image pull and divert control flow to image-rs inside the guest.

sequenceDiagram

kubelet->>containerd: create container

containerd->>nydus snapshotter: load container snapshot

nydus snapshotter->>image-rs: download container

kubelet->>containerd: start container

containerd->>kata shim: start container

kata shim->>kata agent: start container

The above is a simplified diagram showing the interaction of containerd, the nydus snapshotter, and image-rs. The diagram does not show the creation of the sandbox.

Attestation

Confidential Containers also provides components inside the guest and elsewhere to facilitate attestation. Attestation is a crucial part of confidential computing and a direct requirement of many guest operations. For example, to unpack an encrypted container image, the guest must retrieve a secret key. Inside the guest, the confidential-data-hub (CDH) and attestation-agent (AA) handle operations involving secrets and attestation. Again, these components are beyond the scope of traditional Kata deployments and are located in the guest components repository.

The CDH and AA use the KBS Protocol to communicate with an external, trusted entity. Confidential Containers provides Trustee as an attestation service and key management engine that validates the guest TCB and releases secret resources.

sequenceDiagram

workload->>CDH: request secret

CDH->>AA: get attestation token

AA->>KBS: attestation request

KBS->>AA: challenge

AA->>KBS: attestation

KBS->>AA: attestation token

AA->>CDH: attestation token

CDH->>KBS: secret request

KBS->>CDH: encrypted secret

CDH->>workload: secret

The above is a somewhat simplified diagram of the attestation process. The diagram does not show the details of how the workload interacts with the CDH.
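The message flow in the diagram can be mimicked with a toy model. The sketch below is purely conceptual and does not implement the KBS Protocol: every class and function name is hypothetical, a SHA-256 hash stands in for hardware-signed evidence, and the secret is returned in the clear rather than encrypted. It only shows why a fresh challenge and a matching measurement are both needed before a secret is released.

```python
import hashlib
import hmac
import secrets


def toy_evidence(nonce: str, measurement: str) -> str:
    """Stand-in for hardware evidence: binds a measurement to a fresh nonce."""
    return hashlib.sha256(f"{nonce}:{measurement}".encode()).hexdigest()


class ToyKBS:
    """Issues challenges, checks evidence, and releases secrets for valid tokens."""

    def __init__(self, reference_measurement: str, resources: dict):
        self.reference_measurement = reference_measurement
        self.resources = resources
        self.pending_nonces = set()
        self.valid_tokens = set()

    def challenge(self) -> str:
        nonce = secrets.token_hex(16)
        self.pending_nonces.add(nonce)
        return nonce

    def attest(self, nonce: str, evidence: str) -> str:
        if nonce not in self.pending_nonces:
            raise PermissionError("unknown or reused nonce")
        self.pending_nonces.remove(nonce)  # each challenge is single-use
        expected = toy_evidence(nonce, self.reference_measurement)
        if not hmac.compare_digest(evidence, expected):
            raise PermissionError("evidence does not match the reference value")
        token = secrets.token_hex(16)
        self.valid_tokens.add(token)
        return token

    def get_resource(self, token: str, path: str) -> str:
        if token not in self.valid_tokens:
            raise PermissionError("invalid attestation token")
        return self.resources[path]


class ToyAA:
    """Obtains an attestation token on behalf of the guest."""

    def __init__(self, kbs: ToyKBS, measurement: str):
        self.kbs = kbs
        self.measurement = measurement

    def get_token(self) -> str:
        nonce = self.kbs.challenge()
        return self.kbs.attest(nonce, toy_evidence(nonce, self.measurement))


class ToyCDH:
    """Fetches secrets for the workload using a token from the AA."""

    def __init__(self, kbs: ToyKBS, aa: ToyAA):
        self.kbs = kbs
        self.aa = aa

    def get_secret(self, path: str) -> str:
        return self.kbs.get_resource(self.aa.get_token(), path)
```

A guest whose measurement differs from the reference value never receives a token, so its secret request cannot succeed.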

Putting the pieces together

If we take Kata Containers and add guest image pulling and attestation, we arrive at the following diagram, which represents Confidential Containers.

flowchart TD

kubelet-->containerd

containerd-->kata-shim

kata-shim-->hypervisor

containerd-->nydus-snapshotter

subgraph guest

kata-agent-->pod

ASR-->CDH

CDH<-->AA

pod-->ASR

image-rs-->pod

image-rs-->CDH

end

subgraph pod

container-a

container-b

container-c

end

subgraph Trustee

KBS-->AS

AS-->RVPS

end

AA-->KBS

CDH-->KBS

nydus-snapshotter-->image-rs

hypervisor-->guest

kata-shim<-->kata-agent

image-rs<-->registry

Clouds and Nesting

Most confidential computing hardware does not support nesting. More specifically, a confidential guest cannot be started inside of a confidential guest, and with few exceptions a confidential guest cannot be started inside of a non-confidential guest. This poses a challenge for those who do not have access to bare metal machines or would like to have virtual worker nodes.

To alleviate this, Confidential Containers supports a deployment mode known as Peer Pods, where a component called the Cloud API Adaptor takes the place of a conventional hypervisor. Rather than starting a confidential PodVM locally, the CAA reaches out to a cloud API. Since the PodVM is no longer started locally the worker node can be virtualized. This also allows confidential containers to integrate with cloud confidential VM offerings.

Peer Pods deployments share most of the same properties that are described in this guide.

Process-based Isolation

Confidential Containers also supports SGX with enclave-cc. Because the Kata guest cannot be run as a single process, the design of enclave-cc is significantly different. In fact, enclave-cc doesn’t use Kata at all, but it does still represent a pod-centric approach with some sharing between containers even as they run in separate enclaves. enclave-cc does use some of the guest components as crates.

Components

Confidential Containers integrates many components. Here is a brief overview of most of the components related to the project.

| Component | Repository | Purpose |

|---|---|---|

| Operator | operator | Installs Confidential Containers |

| Kata Shim | kata-containers/kata-containers | Starts PodVM and proxies requests to Kata Agent |

| Kata Agent | kata-containers/kata-containers | Sets up and runs the workload inside of a VM |

| image-rs | guest-components | Downloads and unpacks container images |

| ocicrypt-rs | guest-components | Decrypts encrypted container layers |

| confidential-data-hub | guest-components | Handles secret resources |

| attestation-agent | guest-components | Attests guest |

| api-server-rest | guest-components | Proxies requests from workload container to CDH |

| key-broker-service | Trustee | Coordinates attestation and secret delivery (relying party) |

| attestation-service | Trustee | Validates hardware evidence (verifier) |

| reference-value-provider-service | Trustee | Manages reference values |

| Nydus Snapshotter | containerd/nydus-snapshotter | Triggers guest image pulling |

| cloud-api-adaptor | cloud-api-adaptor | Starts PodVM in the cloud |

| agent-protocol-forwarder | cloud-api-adaptor | Forwards Kata Agent API from cloud API |

Component Dependencies

Many of the above components depend on each other either directly in the source, during packaging, or at runtime. The basic premise is that the operator deploys a special configuration of Kata Containers that uses a rootfs (built by the Kata CI) that includes the guest components. This diagram shows these relationships in more detail. The diagram does not capture runtime interactions.

flowchart LR

Trustee --> Versions.yaml

Guest-Components --> Versions.yaml

Kata --> kustomization.yaml

Guest-Components .-> Client-tool

Guest-Components --> enclave-agent

enclave-cc --> kustomization.yaml

Guest-Components --> versions.yaml

Trustee --> versions.yaml

Kata --> versions.yaml

subgraph Kata

Versions.yaml

end

subgraph Guest-Components

end

subgraph Trustee

Client-tool

end

subgraph enclave-cc

enclave-agent

end

subgraph Operator

kustomization.yaml

reqs-deploy

end

subgraph cloud-api-adaptor

versions.yaml

end

Workloads

Confidential Containers provides a set of primitives for building confidential Cloud Native applications. For instance, it allows a pod to be run inside of a confidential VM, and it handles encrypted and signed container images, sealed secrets, and other features described in the features section. This does not guarantee that any application run with Confidential Containers is confidential or secure. Users deploying applications with Confidential Containers should understand the attack surface and security implications of their workloads, focusing especially on APIs that cross the confidential trust boundary.

3.2 - Trust Model

3.2.1 - Trust Model for Confidential Containers

Confidential Containers mainly relies on VM enclaves, where the guest does not trust the host. Confidential computing, and by extension Confidential Containers, provides technical assurances that the untrusted host cannot access guest data or manipulate guest control flow.

Trusted

Confidential Containers maps pods to confidential VMs, meaning that everything inside a pod is within an enclave. In addition to the workload pod, the guest also contains helper processes and daemons to set up and control the pod. These include the kata-agent and guest components as described in the architecture section.

More specifically, the guest is defined as four components:

- Guest firmware

- Guest kernel

- Guest kernel command line

- Guest root filesystem

All platforms supported by Confidential Containers must measure these four components. Details about the mechanisms for each platform are below.
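Conceptually, validating a guest means comparing a measurement of each of the four components against a known-good reference value. The sketch below illustrates only that comparison. Its names are hypothetical, and it assumes one plain SHA-384 digest per component, whereas real platforms compute measurements in hardware or firmware and report them in signed evidence whose format differs per platform.

```python
import hashlib

COMPONENTS = ("firmware", "kernel", "cmdline", "rootfs")


def digest(data: bytes) -> str:
    """Return the SHA-384 hex digest used as the toy measurement."""
    return hashlib.sha384(data).hexdigest()


def mismatched_components(guest: dict, reference: dict) -> list:
    """Return the components whose digest differs from the reference value."""
    return [name for name in COMPONENTS if digest(guest[name]) != reference[name]]
```

Changing even a single kernel command line argument changes the corresponding digest, which is why the command line is treated as part of the measured guest.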

Note that the hardware measurement usually does not directly cover the workload containers. Instead, containers are covered by a second stage of measurement that uses generic OCI standards such as signing. This second stage of measurement is rooted in the trust of the first stage, but decoupled from the guest image.

Confidential Containers also relies on an external trusted entity, usually Trustee, to attest the guest.

Untrusted

Everything on the host outside of the enclave is untrusted. This includes the Kubelet, CRI runtimes like containerd, the host kernel, the Kata Shim, and more.

Since the Kubernetes control plane is untrusted, some traditional Kubernetes security techniques are not relevant to Confidential Containers without special considerations.

Crossing the trust boundary

In confidential computing careful scrutiny is required whenever information crosses the boundary between the trusted and untrusted contexts. Secrets should not leave the enclave without protection and entities outside of the enclave should not be able to trigger malicious behavior inside the guest.

In Confidential Containers there are APIs that cross the trust boundary. The main example is the API between the Kata Agent in the guest and the Kata Shim on the host. This API is protected with an OPA policy running inside the guest that can block malicious requests by the host.

Note that the kernel command line, which is used to configure the Kata Agent, does not cross the trust boundary because it is measured at boot. Assuming that the guest measurement is validated, the APIs that are most significant are those that are not measured by the hardware.

Quantifying the attack surface of an API is non-trivial. The Kata Agent can perform complex operations such as mounting a block device provided by the host. If a host-provided device is attached to the guest, the attack surface extends to any information provided by this device. It’s also possible that any of the code used to implement the API inside the guest has a bug in it. As the complexity of the API increases, the likelihood of a bug increases. The nuances of the Kata Agent API are why Confidential Containers relies on a dynamic and user-configurable policy to either block endpoints entirely or only allow particular types of requests to be made. For example, the policy can be used to make sure that a block device is mounted only to a particular location.
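The two kinds of restriction described above (blocking an endpoint entirely, or allowing it only for particular requests) can be illustrated with a small stand-in. This is not how the real policy is written: the actual mechanism is an OPA policy evaluated inside the guest, while the class below is a hypothetical Python model, and the endpoint names and the `mount_point` field are made up for the example.

```python
from dataclasses import dataclass, field


@dataclass
class ToyAgentPolicy:
    """Allow-list model: unknown endpoints and unexpected mount points are denied."""

    allowed_endpoints: set = field(default_factory=set)
    allowed_mount_points: set = field(default_factory=set)

    def allows(self, endpoint: str, request: dict) -> bool:
        # Block the endpoint entirely unless it is explicitly allowed.
        if endpoint not in self.allowed_endpoints:
            return False
        # If the request mounts something, restrict where it may be mounted.
        mount_point = request.get("mount_point")
        if mount_point is not None and mount_point not in self.allowed_mount_points:
            return False
        return True
```

Denying by default keeps the unmeasured API surface limited to what the workload owner has explicitly reviewed.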

Applications deployed with Confidential Containers should also be aware of the trust boundary. An application running inside of an enclave is not secure if it exposes a dangerous API to the outside world. Confidential applications should almost always be deployed with signed and/or encrypted images. Otherwise the container image itself can be considered as part of the unmeasured API.

Out of Scope

Some attack vectors are out of scope of confidential computing and Confidential Containers. For instance, confidential computing platforms usually do not protect against hardware side-channels. Neither does Confidential Containers. Different hardware platforms and platform generations may have different guarantees regarding properties like memory integrity. Confidential Containers inherits the properties of whatever TEE it is using.

Confidential computing does not protect against denial of service. Since the untrusted host is in charge of scheduling, it can simply not run the guest. This is true for Confidential Containers as well. In Confidential Containers the untrusted host can avoid scheduling the pod VM and the untrusted control plane can avoid scheduling the pod. These are seen as equivalent.

In general orchestration is untrusted in Confidential Containers. Confidential Containers provides few guarantees about where, when, or in what order workloads run, besides that the workload is deployed inside of a genuine enclave containing the expected software stack.

Cloud Native Personas

So far the trust model has been described in terms of a host and a guest, following from the underlying confidential computing trust model, but these terms are not used in cloud native computing. How do we understand the trust model in terms of cloud native personas? Confidential Containers is a flexible project. It does not explicitly define how parties should interact, but some possible arrangements are described in the personas section.

Measurement Details

As mentioned above, all hardware platforms must measure the four components representing the guest image. This table describes how each platform does this.

| Platform | Firmware | Kernel | Command Line | Rootfs |

|---|---|---|---|---|

| SEV-SNP | Pre-measured by ASP | Measured direct boot via OVMF | Measured direct boot | Measured direct boot |

| TDX | Pre-launch measurement | RTMR | RTMR | Dm-verity hash provided in command line |

| SE | Included in encrypted SE image | Included in SE image | Included in SE image | Included in SE image |

See Also

- Confidential Computing Consortium (CCC) published “A Technical Analysis of Confidential Computing”, section 5 of which defines the threat model for confidential computing.

- CNCF Security Technical Advisory Group published “Cloud Native Security Whitepaper”.

- Kubernetes provides documentation: “Overview of Cloud Native Security”.

- Open Web Application Security Project: “Docker Security Threat Modeling”.

3.2.2 - Personas

Personas

Otherwise referred to as actors or agents, these are individuals or groups capable of carrying out a particular threat. In identifying personas we consider:

- The Runtime Environment, Figure 5, Page 19 of CNCF Cloud Native Security Paper. This highlights three layers, Cloud/Environment, Workload Orchestration, Application.

- The Kubernetes Overview of Cloud Native Security identifies the 4C’s of Cloud Native Security as Cloud, Cluster, Container and Code. However, data is core to confidential containers rather than code.

- The Confidential Computing Consortium paper A Technical Analysis of Confidential Computing defines Confidential Computing as the protection of data in use by performing computations in a hardware-based Trusted Execution Environment (TEE).

In considering personas we recognise that a trust boundary exists between each persona and we explore how the least privilege principle (as described on Page 40 of Cloud Native Security Paper) should apply to any actions which cross these boundaries.

Confidential containers can provide enhancements to ensure that the expected code/containers are the only code that can operate over the data. However, any vulnerabilities within this code are not mitigated by using confidential containers. The Cloud Native Security Whitepaper details lifecycle aspects that relate to the security of the code being placed into containers, such as static/dynamic analysis, security tests, and code review, which must still be followed.

Any of these personas could attempt to perform malicious actions:

Infrastructure Operator

This persona has privileges within the Cloud Infrastructure which includes the hardware and firmware used to provide compute, network and storage to the Cloud Native solution. They are responsible for availability of infrastructure used by the cloud native environment.

- Have access to the physical hardware.

- Have access to the processes involved in the deployment of compute/storage/memory used by any orchestration components and by the workload.

- Have control over TEE hardware availability/type.

- Responsibility for applying firmware updates to infrastructure including the TEE Technology.

Examples: Cloud Service Provider (CSP), Site Reliability Engineer (SRE), etc.

Orchestration Operator

This persona has privileges within the Orchestration/Cluster. They are responsible for deploying a solution into a particular cloud native environment and managing the orchestration environment. For a managed cluster this would also include the administration of the cluster control plane.

- Control availability of service.

- Control webhooks and deployment of workloads.

- Control availability of cluster resources (data/networking/storage) and cluster services (Logging/Monitoring/Load Balancing) for the workloads.

- Control the deployment of runtime artifacts required by the TEE during initialisation, before hosting the confidential workload.

Example: A Kubernetes administrator responsible for deploying pods to a cluster and maintaining the cluster.

Workload Provider

This persona designs and creates the orchestration objects comprising the solution (e.g. Kubernetes Pod spec, etc). These objects reference containers published by Container Image Providers. In some cases the Workload and Container Image Providers may be the same entity. The solution defined is intended to provide the Application or Workload which in turn provides value to the Data Owners (customers and clients). The Workload Provider and Data Owner could be part of same company/organisation but following the least privilege principle the Workload Provider should not be able to view or manipulate end user data without informed consent.

- Need to prove to customer aspects of compliance.

- Defines what the solution requires in order to run and maintain compliance (resources, utility containers/services, storage).

- Chooses the method of verifying the container images (from those supported by Container Image Provider) and obtains artifacts needed to allow verification to be completed within the TEE.

- Provide the boot images initially required by the TEE during initialisation or designates a trusted party to do so.

- Provide the attestation verification service, or designate a trusted party to provide the attestation verification service.

Examples: 3rd party software vendor, CSP

Container Image Provider

This persona is responsible for the part of the supply chain that builds container images and provides them for use by the solution. Since a workload can be composed of multiple containers, there may be multiple container image providers. Some will be closely connected to the workload provider (business logic containers), others more independent of the workload provider (side car containers). The container image provider is expected to use a mechanism that allows the provenance of a container image to be established when a workload pulls in these images at deployment time. This can take the form of signing or encrypting the container images.

- Builds container images.

- Owner of business logic containers. These may contain proprietary algorithms, models or secrets.

- Signs or encrypts the images.

- Defines the methods available for verifying the container images to be used.

- Publishes the signature verification key (public key).

- Provides any decryption keys through a secure channel (generally to a key management system controlled by a Key Broker Service).

- Provides other required verification artifacts (secure channel may be considered).

- Protects the keys used to sign or encrypt the container images.

It is recognised that hybrid options exist surrounding workload provider and container provider. For example the workload provider may choose to protect their supply chain by signing/encrypting their own container images after following the build patterns already established by the container image provider.

Example: Istio

Data Owner

Owner of data used, and manipulated by the application.

- Concerned with visibility and integrity of their data.

- Concerned with compliance and protection of their data.

- Uses and shares data with solutions.

- Wishes to ensure no visibility or manipulation of data is possible by Orchestration Operator or Cloud Operator personas.

Discussion

Data Owner vs. All Other Personas

The key trust relationship here is between the Data Owner and the other personas. The Data Owner trusts the code in the form of container images chosen by the Workload Provider to operate across their data, however they do not trust the Orchestration Operator or Cloud Operator with their data and wish to ensure data confidentiality.

Workload Provider vs. Container Image Provider

The Workload Provider is free to choose Container Image Providers that will provide not only the images they need but also support the verification method they require. A key aspect of this relationship is the Workload Provider applying Supply Chain Security practices (as described on Page 42 of the Cloud Native Security Paper) when considering Container Image Providers. The Container Image Provider must therefore support the Workload Provider’s ability to provide assurance to the Data Owner regarding the integrity of the code.

With Confidential Containers we match the TEE boundary to the most restrictive boundary which is between the Workload Provider and the Orchestration Operator.

Orchestration Operator vs. Infrastructure Operator

Outside the TEE we distinguish between the Orchestration Operator and the Infrastructure Operator due to the nature of how they can impact the TEE and the concerns of the Workload Provider and Data Owner. Direct threats exist from the Orchestration Operator, as some orchestration actions must be permitted to cross the TEE boundary, otherwise orchestration cannot occur. A key goal is to deprivilege orchestration and restrict the Orchestration Operator’s privileges across the boundary. However, indirect threats exist from the Infrastructure Operator, who would not be permitted to exercise orchestration APIs but could exploit low-level hardware or firmware capabilities to access or impact the contents of a TEE.

Workload Provider vs. Data Owner

Inside the TEE we need to be able to distinguish between the Workload Provider and Data Owner, in recognition that the same workload (or parts of it, such as logging/monitoring) can be re-used with different data sets to provide a service/solution. In the case of a bespoke workload, the Workload Provider and Data Owner may be the same persona. As mentioned, the Data Owner must have a level of trust in the Workload Provider to use and expose the data provided in an expected and approved manner. Page 10 of A Technical Analysis of Confidential Computing suggests some approaches to establish trust between them.

The TEE boundary allows the introduction of secrets but just as we recognised the TEE does not provide protection from code vulnerabilities, we also recognised that a TEE cannot enforce complete distrust between Workload Provider and Data Owner. This means secrets within the TEE are at risk from both Workload Provider and Data Owner and trying to keep secrets which protect the workload (container encryption etc), separated from secrets to protect the data (data encryption) is not provided simply by using a TEE.

Recognising that Data Owner and Workload Provider are separate personas helps us to identify threats to both data and workload independently, and to recognise that any solution must consider the potentially independent nature of these personas. Two examples of trust between Data Owner and Workload Provider are:

- Proprietary, protected AI models require the workload to be encrypted and not shared with the Data Owner. In this case, secrets private to the Workload Provider are needed to access the workload, while secrets required to access the data are provided by the Data Owner, who trusts the workload/model without having direct access to how the workload functions. The Data Owner completely trusts the workload and Workload Provider, whereas the Workload Provider does not trust the Data Owner with the full details of their workload.

- The Data Owner verifies and approves certain versions of a workload, and the workload provides the Data Owner with secrets in order to fulfil this. These secrets are available in the TEE for use by the Data Owner to verify the workload. Once verification is achieved, the Data Owner provides secrets and data into the TEE for use by the workload, in full confidence of what the workload will do with their data. The Data Owner independently verifies versions of the workload and only trusts specific versions of the workload with the data, whereas the Workload Provider completely trusts the Data Owner.

Data Owner vs. End User

We do not draw a distinction between data owner and end user, though we do recognise that in some cases these may not be identical. For example, data may be provided to a workload to allow analysis, with the results made available to an end user. The original data is never provided directly to the end user, but the derived data is. In this case the data owner can be different from the end user and may wish to protect the original data from the end user.

4 - Features

In addition to running pods inside of enclaves, Confidential Containers provides several other features that can be used to protect workloads and data. Securing complex workloads often requires using some of these features.

Most features depend on and require attestation, which is described in the next section.

4.1 - Get Attestation

Warning

This feature is disabled by default due to some subtle security considerations which are described below.

Workloads can directly request attestation evidence. A workload could use this evidence to carry out its own attestation protocol.

Enabling

To enable this feature, set the following parameter in the guest kernel command line.

agent.guest_components_rest_api=all

As usual, command line configurations can be added with annotations.

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
      annotations:
        io.katacontainers.config.hypervisor.kernel_params: "agent.guest_components_rest_api=all"
    spec:
      runtimeClassName: (...)
      containers:
      - name: nginx
        (...)

Attestation

Once enabled, an attestation can be retrieved via the REST API.

curl http://127.0.0.1:8006/aa/evidence?runtime_data=xxxx

The API expects runtime data to be provided. The runtime data will be included in the report. Typically this is used to bind a nonce or the hash of a key to the evidence.
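As a minimal sketch of binding the hash of a key to the evidence, the snippet below derives a runtime data value from a SHA-256 digest and builds the request URL. The key bytes are a placeholder, and passing a hex-encoded digest as `runtime_data` is an assumption made for illustration; the endpoint is the one shown above and only answers inside a guest with the REST API enabled.

```shell
# Placeholder key material; a real workload would use a public key it generated itself.
pubkey_file="$(mktemp)"
printf '%s' 'example-public-key-bytes' > "$pubkey_file"

# Hex-encoded SHA-256 digest of the key (64 characters).
runtime_data="$(sha256sum "$pubkey_file" | cut -d' ' -f1)"

# Request URL for the evidence endpoint described above.
evidence_url="http://127.0.0.1:8006/aa/evidence?runtime_data=${runtime_data}"
echo "$evidence_url"

# Inside a CoCo guest one would then run:
#   curl "$evidence_url"
rm -f "$pubkey_file"
```

A verifier that knows the key can recompute the digest and compare it with the value bound into the report.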

Security

Enabling this feature can make your workload vulnerable to so-called “evidence factory” attacks. By default, different CoCo workloads can have the same TCB, even if they use different container images. This is a design feature, but it means that when the attestation report API is enabled, two workloads could produce interchangeable attestation reports, even if they are operated by different parties.

To make sure someone else cannot generate evidence that could be used with your attestation protocol, configure your guest to differentiate its TCB. For example, use the init-data (which is measured) to specify the public key of the KBS. Init-data is currently only supported with peer pods. More information about it will be added soon.

4.2 - Get Secret Resources

Note

Requesting secret resources depends on attestation. Configure attestation before requesting resources.

While sealed secrets can be used to store encrypted secret resources as Kubernetes Secrets and have them transparently decrypted using keys from Trustee, a workload can also explicitly request resources via a REST API that is automatically exposed to the pod network namespace.

For example, you can run this command from your container.

curl http://127.0.0.1:8006/cdh/resource/default/key/1

In this example, Trustee will fulfill the request for kbs:///default/key/1, assuming that the resource has been provisioned.
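The relationship between a `kbs:///` resource URI and the local REST path can be sketched with a small helper. The function name `kbs_uri_to_cdh_url` is hypothetical and not part of CoCo; it only performs the string mapping implied by the example above.

```shell
# Hypothetical helper: map a kbs:/// resource URI to the local CDH REST URL.
kbs_uri_to_cdh_url() {
  uri="$1"
  case "$uri" in
    kbs:///*) path="${uri#kbs:///}" ;;
    *) echo "not a kbs:/// URI: $uri" >&2; return 1 ;;
  esac
  printf 'http://127.0.0.1:8006/cdh/resource/%s\n' "$path"
}

resource_url="$(kbs_uri_to_cdh_url 'kbs:///default/key/1')"
echo "$resource_url"

# Inside a CoCo pod one would then run:
#   curl "$resource_url"
```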

4.3 - Authenticated Registries

Context

A user might want to use container images from private OCI registries, which require authentication. The project provides the means to pull protected images from an authenticated registry.

Important: ideally the authentication credentials should only be accessible from within a Trusted Execution Environment. However, due to some limitations in the architecture of components used by CoCo, the credentials need to be exposed to the host, so registry authentication is not currently a confidential feature. The community has been working to remediate that limitation and, in the meantime, we recommend the use of encrypted images as a mitigation.

Instructions

The following steps require a functional CoCo installation on a Kubernetes cluster. A Key Broker Client (KBC) has to be configured for TEEs to be able to retrieve confidential secrets. We assume cc_kbc as a KBC for the CoCo project’s Key Broker Service (KBS) in the following instructions, but authenticated registries should work with other Key Broker implementations in a similar fashion.

Create registry authentication file

The registry authentication file should have the containers-auth.json format, with the exception of credential helpers (credHelpers), which aren’t supported. Glob URLs and prefix-matched paths, as in the Kubernetes interpretation of config.json, are not supported either.

Create the registry authentication file (e.g. containers-auth.json) like this:

export AUTHENTICATED_IMAGE="my-registry.local/repository/image:latest"

export AUTHENTICATED_IMAGE_NAMESPACE="$(echo "$AUTHENTICATED_IMAGE" | cut -d':' -f1)"

export AUTHENTICATED_IMAGE_USER="MyRegistryUser"

export AUTHENTICATED_IMAGE_PASSWORD="MyRegistryPassword"

cat <<EOF > containers-auth.json
{
  "auths": {
    "${AUTHENTICATED_IMAGE_NAMESPACE}": {
      "auth": "$(printf '%s:%s' "${AUTHENTICATED_IMAGE_USER}" "${AUTHENTICATED_IMAGE_PASSWORD}" | base64 -w 0)"
    }
  }
}
EOF

Where:

- AUTHENTICATED_IMAGE is the fully qualified image name

- AUTHENTICATED_IMAGE_NAMESPACE is the image name without the tag

- AUTHENTICATED_IMAGE_USER and AUTHENTICATED_IMAGE_PASSWORD are the registry user and password, respectively

- auth’s value is the colon-separated user and password (user:password) credentials string encoded in base64
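To double-check an auth value, it can be decoded again and compared with the expected user:password string. The sketch below uses the placeholder credentials from this example and `printf` rather than `echo`, so that no trailing newline ends up inside the encoded string.

```shell
user="MyRegistryUser"
password="MyRegistryPassword"

# Encode user:password without a trailing newline.
auth="$(printf '%s:%s' "$user" "$password" | base64 | tr -d '\n')"

# Decode it again to confirm the round trip.
decoded="$(printf '%s' "$auth" | base64 -d)"
echo "$decoded"
```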

Provision the registry authentication file

Prior to launching a Pod the registry authentication file needs to be provisioned to the Key Broker’s repository. For a KBS deployment on Kubernetes using the local filesystem as repository storage it would work like this:

export KEY_PATH="default/containers/auth"

kubectl exec deploy/kbs -c kbs -n coco-tenant -- mkdir -p "/opt/confidential-containers/kbs/repository/$(dirname "$KEY_PATH")"

cat containers-auth.json | kubectl exec -i deploy/kbs -c kbs -n coco-tenant -- tee "/opt/confidential-containers/kbs/repository/${KEY_PATH}" > /dev/null

The CoCo infrastructure components need to cooperate with containerd and nydus-snapshotter to pull the container image from within the TEE. Currently the nydus-snapshotter needs to fetch the image’s metadata from the registry, so authentication credentials are also read from a Kubernetes secret of docker-registry type. Create such a secret like this:

export SECRET_NAME="cococred"

kubectl create secret docker-registry "${SECRET_NAME}" --docker-server="https://${AUTHENTICATED_IMAGE_NAMESPACE}" \
  --docker-username="${AUTHENTICATED_IMAGE_USER}" --docker-password="${AUTHENTICATED_IMAGE_PASSWORD}"

Where:

- SECRET_NAME is any secret name

Launch a Pod

Create the pod yaml (e.g. pod-image-auth.yaml) like below and apply it:

export KBS_ADDRESS="172.18.0.3:31731"

export RUNTIMECLASS="kata-qemu-coco-dev"

cat <<EOF > pod-image-auth.yaml
apiVersion: v1
kind: Pod
metadata:
  name: image-auth-feat
  annotations:
    io.containerd.cri.runtime-handler: ${RUNTIMECLASS}
    io.katacontainers.config.hypervisor.kernel_params: ' agent.image_registry_auth=kbs:///${KEY_PATH} agent.guest_components_rest_api=resource agent.aa_kbc_params=cc_kbc::http://${KBS_ADDRESS}'
spec:
  runtimeClassName: ${RUNTIMECLASS}
  containers:
  - name: test-container
    image: ${AUTHENTICATED_IMAGE}
    imagePullPolicy: Always
    command:
    - sleep
    - infinity
  imagePullSecrets:
  - name: ${SECRET_NAME}
EOF

Where:

- KBS_ADDRESS is the host:port address of KBS

- RUNTIMECLASS is any of the available CoCo runtime classes (e.g. kata-qemu-tdx, kata-qemu-snp). In this example, kata-qemu-coco-dev allows a CoCo pod to be created on systems without confidential hardware. It should be replaced with a class matching the TEE in use.

What distinguishes the pod specification for an authenticated registry from that of a regular CoCo pod is:

- the agent.image_registry_auth property in the io.katacontainers.config.hypervisor.kernel_params annotation, which indicates the location of the registry authentication file as a resource in the KBS

- the imagePullSecrets, as required by nydus-snapshotter

Check that the pod reaches the Running state:

kubectl get -f pod-image-auth.yaml

NAME READY STATUS RESTARTS AGE

image-auth-feat 1/1 Running 0 2m52s

4.4 - Encrypted Images

Context

A user might want to bundle sensitive data on an OCI (Docker) image. The image layers should only be accessible within a Trusted Execution Environment (TEE).

The project provides the means to encrypt an image with a symmetric key that is released to the TEE only after successful verification and appraisal in a Remote Attestation process. CoCo infrastructure components within the TEE will transparently decrypt the image layers as they are pulled from a registry without exposing the decrypted data outside the boundaries of the TEE.

Instructions

The following steps require a functional CoCo installation on a Kubernetes cluster. A Key Broker Client (KBC) has to be configured for TEEs to be able to retrieve confidential secrets. We assume cc_kbc as a KBC for the CoCo project’s Key Broker Service (KBS) in the following instructions, but image encryption should work with other Key Broker implementations in a similar fashion.

Note

Please ensure you have a recent version of Skopeo (v1.13.3+) installed locally.

Encrypt an image

We extend a public image with secret data.

docker build -t unencrypted - <<EOF

FROM nginx:stable

RUN echo "something confidential" > /secret

EOF

The encryption key needs to be a 32 byte sequence and provided to the encryption step as base64-encoded string.

KEY_FILE="image_key"

head -c 32 /dev/urandom | openssl enc > "$KEY_FILE"

KEY_B64="$(base64 < $KEY_FILE)"

The key id is a generic resource descriptor used by the key broker to look up secrets in its storage. For KBS this is composed of three segments: $repository_name/$resource_type/$resource_tag

KEY_PATH="/default/image_key/nginx"

KEY_ID="kbs://${KEY_PATH}"
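As an illustration of the three-segment structure, the following sketch splits a key id back into its parts using plain shell string handling. The variable names mirror the segment names above; the splitting logic itself is only an example and is not taken from the KBS implementation.

```shell
KEY_ID="kbs:///default/image_key/nginx"

# Strip the scheme and the leading slash of the path.
resource_path="${KEY_ID#kbs:///}"

# Split the remaining path on "/" into its three segments.
IFS=/ read -r repository_name resource_type resource_tag <<EOF
$resource_path
EOF

echo "repository=${repository_name} type=${resource_type} tag=${resource_tag}"
```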

The image encryption logic is bundled and invoked in a container:

git clone https://github.com/confidential-containers/guest-components.git

cd guest-components

docker build -t coco-keyprovider -f ./attestation-agent/docker/Dockerfile.keyprovider .

To access the image from within the container, Skopeo can be used to buffer the image in a directory, which is then made available to the container. Similarly, the resulting encrypted image will be put into an output directory.

mkdir -p oci/{input,output}

skopeo copy docker-daemon:unencrypted:latest dir:./oci/input

docker run -v "${PWD}/oci:/oci" coco-keyprovider /encrypt.sh -k "$KEY_B64" -i "$KEY_ID" -s dir:/oci/input -d dir:/oci/output

We can inspect layer annotations to confirm the expected encryption was applied:

skopeo inspect dir:./oci/output | jq '.LayersData[0].Annotations["org.opencontainers.image.enc.keys.provider.attestation-agent"] | @base64d | fromjson'

Sample output:

{

"kid": "kbs:///default/image_key/nginx",

"wrapped_data": "lGaLf2Ge5bwYXHO2g2riJRXyr5a2zrhiXLQnOzZ1LKEQ4ePyE8bWi1GswfBNFkZdd2Abvbvn17XzpOoQETmYPqde0oaYAqVTMcnzTlgdYYzpWZcb3X0ymf9bS0gmMkqO3dPH+Jf4axXuic+ITOKy7MfSVGTLzay6jH/PnSc5TJ2WuUJY2rRtNaTY65kKF2K9YP6mtYBqcHqvPDlFiVNNeTAGv2w1zwaMlgZaSHV+Z1y+xxbOV5e98bxuo6861rMchjCiE7FY37PHD3a5ISogq90=",

"iv": "Z8bGQL7r6qxSpd4L",

"wrap_type": "A256GCM"

}

Finally, the resulting encrypted image can be provisioned to an image registry.

ENCRYPTED_IMAGE=some-private.registry.io/coco/nginx:encrypted

skopeo copy dir:./oci/output "docker://${ENCRYPTED_IMAGE}"

Provision image key

Prior to launching a Pod the image key needs to be provisioned to the Key Broker’s repository. For a KBS deployment on Kubernetes using the local filesystem as repository storage it would work like this:

kubectl exec deploy/kbs -- mkdir -p "/opt/confidential-containers/kbs/repository/$(dirname "$KEY_PATH")"

cat "$KEY_FILE" | kubectl exec -i deploy/kbs -- tee "/opt/confidential-containers/kbs/repository/${KEY_PATH}" > /dev/null

Note: If you are not using a KBS deployment created by the Trustee operator, an additional namespace argument (-n coco-tenant) may be needed.

Launch a Pod

We create a simple deployment using our encrypted image. As the image is being pulled and the CoCo components in the TEE encounter the layer annotations that we saw above, the image key will be retrieved from the Key Broker using the annotated Key ID and the layers will be decrypted transparently and the container should come up.

In this example we default to the Cloud API Adaptor runtime. Adjust this depending on the CoCo installation.

kubectl get runtimeclass -o jsonpath='{.items[].handler}'

Sample output:

kata-remote

Export variable:

CC_RUNTIMECLASS=kata-remote

Export KBS address:

KBS_ADDRESS=scheme://host:port

Deploy sample pod:

cat <<EOF > nginx-encrypted.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: nginx
  name: nginx-encrypted
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
      annotations:
        io.katacontainers.config.hypervisor.kernel_params: "agent.aa_kbc_params=cc_kbc::${KBS_ADDRESS}"
        io.containerd.cri.runtime-handler: ${CC_RUNTIMECLASS}
    spec:
      runtimeClassName: ${CC_RUNTIMECLASS}
      containers:
      - image: ${ENCRYPTED_IMAGE}
        name: nginx
        imagePullPolicy: Always
EOF

kubectl apply -f nginx-encrypted.yaml

Alternatively, the KBS address can be supplied through initdata instead of kernel parameters:

- Create the file $HOME/initdata.toml:

cat <<EOF > "$HOME/initdata.toml"
algorithm = "sha256"
version = "0.1.1"

[data]
"aa.toml" = '''
[token_configs]
[token_configs.coco_as]
url = '${KBS_ADDRESS}'

[token_configs.kbs]
url = '${KBS_ADDRESS}'
'''

"cdh.toml" = '''
socket = 'unix:///run/confidential-containers/cdh.sock'
credentials = []

[kbc]
name = 'cc_kbc'
url = '${KBS_ADDRESS}'
'''
EOF

- Export variable:

INIT_DATA_B64=$(cat "$HOME/initdata.toml" | gzip | base64 -w0)

- Deploy:

cat <<EOF > nginx-encrypted.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: nginx
  name: nginx-encrypted
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
      annotations:
        io.katacontainers.config.runtime.cc_init_data: "${INIT_DATA_B64}"
        io.containerd.cri.runtime-handler: ${CC_RUNTIMECLASS}
    spec:
      runtimeClassName: ${CC_RUNTIMECLASS}
      containers:
      - image: ${ENCRYPTED_IMAGE}
        name: nginx
        imagePullPolicy: Always
EOF

kubectl apply -f nginx-encrypted.yaml
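Because the initdata annotation is gzip-compressed and base64-encoded, it can be useful to decode it again before deploying. The sketch below performs that round trip on a shortened, hypothetical initdata file; a real file would contain the full content described in this section.

```shell
# Shortened stand-in for initdata.toml, written to a temporary file.
initdata_file="$(mktemp)"
cat > "$initdata_file" <<'EOF'
algorithm = "sha256"
version = "0.1.1"
EOF

# Same encoding as used for the annotation: gzip, then base64 on a single line.
INIT_DATA_B64="$(gzip -c < "$initdata_file" | base64 | tr -d '\n')"

# Decode again and compare with the original content.
roundtrip="$(printf '%s' "$INIT_DATA_B64" | base64 -d | gunzip -c)"
original="$(cat "$initdata_file")"
[ "$roundtrip" = "$original" ] && echo "initdata round trip ok"
rm -f "$initdata_file"
```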

We can confirm that the image key has been retrieved from KBS.

kubectl logs -f deploy/kbs | grep "$KEY_PATH"

[2024-01-23T10:24:52Z INFO actix_web::middleware::logger] 10.244.0.1 "GET /kbs/v0/resource/default/image_key/nginx HTTP/1.1" 200 530 "-" "attestation-agent-kbs-client/0.1.0" 0.000670

Note: If you are not using a KBS deployment created by the Trustee operator, an additional namespace argument (-n coco-tenant) may be needed.

Debugging

The encrypted image feature relies on a cascade of other features as building blocks:

- Image decryption is built on secret retrieval from KBS

- Secret retrieval is built on remote attestation

- Remote attestation requires retrieval of hardware evidence from the TEE

All of the above have to work in order to decrypt an encrypted image.

0. Launching with an unencrypted image

Launch the same image with unencrypted layers from the same registry to verify that the image itself is not an issue.

1. Retrieve hardware evidence from TEE

Launch an unencrypted library/nginx deployment named nginx with a CoCo runtime class. Issue kubectl exec deploy/nginx -- curl http://127.0.0.1:8006/aa/evidence\?runtime_data\=xxxx. This should produce real hardware evidence rendered as JSON. Something like {"svn":"1","report_data":"eHh4eA=="} is not real hardware evidence; it is a dummy response from the sample attester. Real TEE evidence should be more verbose and contain certificates and measurements.
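One way to recognise the dummy response is to decode its report_data field, which simply echoes the runtime data from the request. The sketch below works on the sample string quoted above; the `sed` extraction is a simplification for this flat JSON object and not a general JSON parser.

```shell
# Dummy response from the sample attester, as quoted above.
sample='{"svn":"1","report_data":"eHh4eA=="}'

# Extract the report_data value from the flat JSON object.
report_data="$(printf '%s' "$sample" | sed -n 's/.*"report_data":"\([^"]*\)".*/\1/p')"

# Decoding yields the runtime data passed in the request.
decoded="$(printf '%s' "$report_data" | base64 -d)"
echo "$decoded"   # prints: xxxx
```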

The reason for not producing real evidence could be a wrong build of Attestation Agent for the TEE that you are attempting to use, or the Confidential VM not exposing the expected interfaces.

Note: In some configurations of the CoCo image the facility to retrieve evidence is disabled by default. For bare-metal CoCo images you can enable it by setting agent.guest_components_rest_api=all on the kernel cmdline (see Get Attestation).

2. Perform remote attestation

Run kubectl exec deploy/nginx -- curl http://127.0.0.1:8006/aa/token\?token_type\=kbs. This should produce an attestation token. If you don’t receive a token but an error you should inspect your Trustee KBS/AS logs and see whether there was a connection attempt for the CVM and potentially a reason why remote attestation failed.

You can set RUST_LOG=debug as an environment variable on the Trustee deployment to receive more verbose logs. If the evidence is being sent to KBS, the issue is most likely resolvable on the KBS side and possibly related to the evidence not meeting the expectations for a key release in KBS/AS policies, or to the requested resource not being available in the KBS.

If you don’t see an attestation attempt in the log of KBS, there might be problems with network connectivity between the Confidential VM and the KBS. Note that the Pod and the Guest Components on the CVM might not share the same network namespace. A Pod might be able to reach KBS, but the Attestation Agent on the Confidential VM might not. If you are using HTTPS to reach the KBS, there might be a problem with the certificate provided to the Confidential VM (e.g. via Initdata).

3. Retrieve decryption key from KBS

If you have successfully retrieved a token, attempt to fetch the symmetric key for the encrypted image manually, using the image’s key id (kid): kubectl exec deploy/nginx -- curl http://127.0.0.1:8006/cdh/resource/default/images/my-key.

You can find the kid for a given encrypted image with a query like this:

$ ANNOTATION="org.opencontainers.image.enc.keys.provider.attestation-agent"
$ skopeo inspect docker://ghcr.io/mkulke/nginx-encrypted@sha256:5a81641ff9363a63c3f0a1417d29b527ff6e155206a720239360cc6c0722696e \
    | jq --arg ann "$ANNOTATION" -r '.LayersData[0].Annotations[$ann] | @base64d | fromjson | .kid'

kbs:///default/image_key/nginx

If the key can be retrieved successfully, verify that the size is exactly 32 bytes and matches the key you used for encrypting the image.
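The size and content check can be sketched as follows. The file names are hypothetical, and the fetched key is simulated with a copy of the local key; in practice it would be the output of the curl command above, saved to a file.

```shell
workdir="$(mktemp -d)"

# Local key as used for encryption (randomly generated here for the sketch).
head -c 32 /dev/urandom > "${workdir}/image_key"

# Stand-in for the key fetched from the KBS.
cp "${workdir}/image_key" "${workdir}/fetched_key"

# The key must be exactly 32 bytes and identical to the local key.
fetched_len="$(wc -c < "${workdir}/fetched_key" | tr -d ' ')"
if [ "$fetched_len" -eq 32 ] && cmp -s "${workdir}/image_key" "${workdir}/fetched_key"; then
  key_check="ok"
else
  key_check="mismatch"
fi
echo "$key_check"
rm -rf "$workdir"
```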

4. Other (desperate) measures

If pulling an encrypted image still doesn’t work after successful retrieval of its encryption key, you might want to purge the node(s). There are bugs in the containerd remote snapshotter implementation that might taint the node with manifests and layers that will interfere with image pulling. The node should be discarded and reinstalled to rule that out.

It might also help to set annotations.io.containerd.cri.runtime-handler: $your-runtime-class in the pod spec, in addition to the runtimeClassName field, and to set imagePullPolicy: Always to ensure that the image is always pulled from the registry.

4.5 - Local Registries

TODO

4.6 - Protected Storage

TODO

4.7 - Sealed Secrets

Note

Sealed Secrets depend on attestation. Configure attestation before using sealed secrets.

Sealed secrets allow confidential information to be stored in the untrusted control plane. Like normal Kubernetes secrets, sealed secrets are orchestrated by the control plane and are transparently provisioned to your workload as environment variables or volumes.

Basic Usage

Here’s how you create a vault secret. There are also envelope secrets, which are described later. Vault secrets are pointers to a resource stored in a KBS, while envelope secrets are wrapped secrets that are unwrapped with a KMS.

Creating a sealed secret

There is a helper tool for sealed secrets in the Guest Components repository.

Clone the repository.

git clone https://github.com/confidential-containers/guest-components.git

Inside the guest-components directory, you can build and run the tool with Cargo.

cargo run -p confidential-data-hub --bin secret

With the tool you can create a secret.

cargo run -p confidential-data-hub --bin secret seal vault --resource-uri kbs:///your/secret/here --provider kbs

A vault secret is fulfilled by retrieving a secret from a KBS inside the guest.

The locator of your secret is specified by resource-uri.

This command should return a base64 string which you will use in the next step.

Note

For vault secrets, the secret-cli tool does not upload your resource to the KBS automatically. In addition to generating the secret string, you must also upload the resource to your KBS.

Adding a sealed secret to Kubernetes

Create a secret from your secret string using kubectl.

kubectl create secret generic sealed-secret --from-literal='secret=sealed.fakejwsheader.ewogICAgInZlcnNpb24iOiAiMC4xLjAiLAogICAgInR5cGUiOiAidmF1bHQiLAogICAgIm5hbWUiOiAia2JzOi8vL2RlZmF1bHQvc2VhbGVkLXNlY3JldC90ZXN0IiwKICAgICJwcm92aWRlciI6ICJrYnMiLAogICAgInByb3ZpZGVyX3NldHRpbmdzIjoge30sCiAgICAiYW5ub3RhdGlvbnMiOiB7fQp9Cg==.fakesignature'

Note

Sealed secrets do not currently support integrity protection. This will be added in the future, but for now a fake signature and signature header are included within the secret.

When using --from-literal you provide a mapping of secret keys and values. The secret value should be the string generated in the previous step. The secret key can be whatever you want, but make sure to use the same one in future steps. This is separate from the name of the secret.
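To see what a sealed secret string contains, its middle segment can be base64-decoded. The sketch below does this for the example string used in the kubectl command above; splitting on "." into prefix, header, payload and signature is inferred from that example rather than from a format specification.

```shell
# Example sealed secret string from the kubectl command above.
sealed='sealed.fakejwsheader.ewogICAgInZlcnNpb24iOiAiMC4xLjAiLAogICAgInR5cGUiOiAidmF1bHQiLAogICAgIm5hbWUiOiAia2JzOi8vL2RlZmF1bHQvc2VhbGVkLXNlY3JldC90ZXN0IiwKICAgICJwcm92aWRlciI6ICJrYnMiLAogICAgInByb3ZpZGVyX3NldHRpbmdzIjoge30sCiAgICAiYW5ub3RhdGlvbnMiOiB7fQp9Cg==.fakesignature'

# The third dot-separated field holds the base64-encoded JSON payload.
payload_b64="$(printf '%s' "$sealed" | cut -d. -f3)"
payload_json="$(printf '%s' "$payload_b64" | base64 -d)"

# Shows the secret type, the KBS resource URI and the provider.
echo "$payload_json"
```

For a vault secret, the decoded payload only names the resource in the KBS; the secret value itself is not part of the string.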

Deploying a sealed secret to a confidential workload

You can add your sealed secret to a workload yaml file.

You can expose your sealed secret as an environment variable.

apiVersion: v1
kind: Pod
metadata:
  name: sealed-secret-pod
spec:
  runtimeClassName: kata-qemu-coco-dev
  containers:
  - name: busybox
    image: quay.io/prometheus/busybox:latest
    imagePullPolicy: Always
    # Run through a shell so that $PROTECTED_SECRET is expanded inside the container.
    command: ["sh", "-c", "echo $PROTECTED_SECRET"]
    env:
    - name: PROTECTED_SECRET
      valueFrom:
        secretKeyRef:
          name: sealed-secret
          key: secret

You can also expose your secret as a volume.

apiVersion: v1
kind: Pod
metadata:
  name: secret-test-pod-cc
spec:
  runtimeClassName: kata
  containers:
  - name: busybox
    image: quay.io/prometheus/busybox:latest
    imagePullPolicy: Always
    command: ["cat", "/sealed/secret-value/secret"]
    volumeMounts:
    - name: sealed-secret-volume
      mountPath: "/sealed/secret-value"
  volumes:
  - name: sealed-secret-volume
    secret:
      secretName: sealed-secret

Note

Currently sealed secret volumes must be mounted in the /sealed directory.

Advanced

Envelope Secrets

You can also create envelope secrets. With envelope secrets, the secret itself is included in the secret (unlike a vault secret, which is just a pointer to a secret). In an envelope secret, the secret value is wrapped and can be unwrapped by a KMS. This allows us to support models where the key for unwrapping secrets never leaves the KMS. It also decouples the secret from the KBS.

We currently support two KMSes for envelope secrets. See the specific instructions for Aliyun KMS and eHSM.

4.8 - Signed Images

Overview

Encrypted images provide confidentiality, but they do not provide authenticity or integrity. Image signatures provide these additional properties and prevent, for example, certain types of image tampering.

In this brief guide, we show two tools that can be used to sign container images: cosign and skopeo. The skopeo tool can create either cosign signatures or “simple signatures” (which leverage gpg keys). Our skopeo examples use the simple signing approach. In either case, the general approach is to

- Create keys for signing,

- Sign a newly tagged image, and

- Update the KBS with the public signature key and a security policy.

Creating an Image

Creating Keys

Create a keypair using one of two approaches, cosign or Simple Signing - gpg.

To generate a public-private keypair with cosign, provide your

COSIGN_PASSWORD and use the generate-key-pair action:

$ COSIGN_PASSWORD=just1testing2password3 cosign generate-key-pair

This will create the private and public keys: cosign.key and cosign.pub.

skopeo depends on gpg for a keypair.

To generate a keypair with gpg using default options, use --full-generate-key:

$ gpg --full-generate-key

There are several prompts. A user for test purposes could be:

Github Runner

git@runner.com

just1testing2password3

Then export it. The --export-secret-key option is sufficient for exporting

both the secret and public key. Example command:

$ gpg --export-secret-key F63DB2A1AB7C7F195F698C9ED9582CADF7FBCC5D > github-runner.keys

The keys can later be imported by gpg in a CI system using --batch to avoid

typing the password:

$ gpg --batch --import ./github-runner.keys

When automating CI or test workflows, you can place the password for the key in a plaintext file (when it is safe to do so):

echo just1testing2password3 > git-runner-password.txt
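If you do keep the passphrase in a file, it is reasonable to restrict its permissions at creation time. The following sketch assumes a scratch directory created with `mktemp` so that it does not touch real files; in a CI job you would write to the path that your signing step expects.

```shell
# Work in a scratch directory so the sketch does not touch real files.
workdir=$(mktemp -d)
pass_file="$workdir/git-runner-password.txt"

# Create the file with owner-only permissions from the start.
(umask 077 && printf '%s\n' 'just1testing2password3' > "$pass_file")

ls -l "$pass_file"
```

Setting the umask in a subshell keeps the stricter mask from leaking into the rest of the script.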

Signing the Image

Sign the image using one of two approaches, cosign or Simple Signing - skopeo.

Use the private key to sign an image.

In this example, we assume that there is a Dockerfile (your_dockerfile below)

for creating an image that you want signed. The workflow is to build the image,

push it to ghcr (which requires docker login), and sign it.

COCO_PKG=confidential-containers/test-container

docker build \
    -t ghcr.io/${COCO_PKG}:cosign-sig \
    -f <your_dockerfile> \
    .

docker push ghcr.io/${COCO_PKG}:cosign-sig

cosign sign --key ./cosign.key ghcr.io/${COCO_PKG}:cosign-sig
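When scripting these steps, note that the shell expands a variable with `${COCO_PKG}`, whereas `$(COCO_PKG)` is command substitution and would try to run a command named `COCO_PKG`. One way to avoid drift between the build, push, and sign steps is to compose the image reference once. This is a minimal sketch; the `IMAGE` variable is our own naming, not something the tools require.

```shell
COCO_PKG=confidential-containers/test-container

# Compose the full reference once and reuse it for every step.
IMAGE="ghcr.io/${COCO_PKG}:cosign-sig"
echo "$IMAGE"

# The same reference would then be passed to each tool, for example:
#   docker build -t "$IMAGE" -f <your_dockerfile> .
#   docker push "$IMAGE"
#   cosign sign --key ./cosign.key "$IMAGE"
```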

Ensure you have a gpg key owned by the user signing the image. See the previous subsection for generating and importing gpg keys.

Sign the image. For example, the following command uses an insecure-policy

to sign a local image called confidential-containers/test-container. It uses

the unsigned tag, and in the process of signing it, creates a new

simple-signed tag.

In this example, the resulting image is pushed to ghcr, which requires docker login:

COCO_PKG=confidential-containers/test-container

skopeo \
    copy \
    --debug \
    --insecure-policy \
    --sign-by git@runner.com \
    --sign-passphrase-file ./git-runner-password.txt \
    docker-daemon:ghcr.io/${COCO_PKG}:unsigned \
    docker://ghcr.io/${COCO_PKG}:simple-signed

Running an Image

Running a workload with a signed image is very similar to running workloads with unsigned images. The main difference is that for a signed image, you must provide a security policy to the KBS. This security policy tells the KBS which image is signed, the type of its key signature, and where to find the public key for verifying the signature.

Beyond this, you run the workload as you usually would (e.g. via kubectl apply).

Setting the Security Policy for Signed Images

Register the public key to KBS storage. For example:

mkdir -p ${KBS_DIR_PATH}/data/kbs-storage/default/cosign-key \

&& cp cosign.pub ${KBS_DIR_PATH}/data/kbs-storage/default/cosign-key/1

Edit an image pulling validation policy file.

Here is a sample policy file security-policy.json:

{
    "default": [{"type": "reject"}],
    "transports": {
        "docker": {
            "[REGISTRY_URL]": [
                {
                    "type": "sigstoreSigned",
                    "keyPath": "kbs:///default/cosign-key/1"
                }
            ]
        }
    }
}

Be sure to replace [REGISTRY_URL] with the registry URL of the signed image.
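To avoid editing the placeholder by hand, the policy file can be generated from a shell variable. The sketch below writes the same policy shown above into a scratch directory. The registry value is a hypothetical example based on the image name used earlier in this guide; substitute your own.

```shell
# Hypothetical registry path; substitute the repository that holds your signed image.
REGISTRY_URL="ghcr.io/confidential-containers/test-container"
workdir=$(mktemp -d)

# The heredoc is unquoted so that ${REGISTRY_URL} is expanded into the policy.
cat > "$workdir/security-policy.json" <<EOF
{
    "default": [{"type": "reject"}],
    "transports": {
        "docker": {
            "${REGISTRY_URL}": [
                {
                    "type": "sigstoreSigned",
                    "keyPath": "kbs:///default/cosign-key/1"
                }
            ]
        }
    }
}
EOF

cat "$workdir/security-policy.json"
```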

Lastly, register the image pulling validation policy file with KBS storage:

mkdir -p ${KBS_DIR_PATH}/data/kbs-storage/default/security-policy

cp security-policy.json ${KBS_DIR_PATH}/data/kbs-storage/default/security-policy/test
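Judging from the two registration commands and the keyPath in the policy, a `kbs:///` URI appears to map directly onto a path below `data/kbs-storage`. The sketch below encodes that inferred mapping; the helper name `kbs_uri_to_path` is hypothetical, the scratch directory stands in for your real `KBS_DIR_PATH`, and the policy URI shown is our inference from the file location rather than something stated in this guide.

```shell
# Scratch directory standing in for the real KBS_DIR_PATH (assumption).
KBS_DIR_PATH=$(mktemp -d)

# Map a kbs:/// URI to the file that would back it under kbs-storage.
kbs_uri_to_path() {
  printf '%s/data/kbs-storage/%s\n' "$KBS_DIR_PATH" "${1#kbs:///}"
}

key_path=$(kbs_uri_to_path "kbs:///default/cosign-key/1")
policy_path=$(kbs_uri_to_path "kbs:///default/security-policy/test")

echo "$key_path"
echo "$policy_path"
```

A helper like this can be handy for checking that every keyPath referenced in a policy has a matching file before starting a workload.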

See Also

Cosign-GitHub Integration

A good tutorial for cosign and GitHub integration is here. The approach is automated and targets real-world usage. For example, this key-generation step automatically uploads the public key, private key, and key secret to the GitHub repo:

$ GITHUB_TOKEN=ghp_... \

COSIGN_PASSWORD=just1testing2password3 \

cosign generate-key-pair github://<github_username>/<github_repo>

5 - Attestation

Before a confidential workload is granted access to sensitive data, it should be attested. Attestation provides guarantees about the TCB, isolation properties, and root of trust of the enclave.

Confidential Containers uses Trustee to verify attestations and conditionally release secrets. Trustee can be used to attest any confidential workload, and it is tightly integrated with Confidential Containers.

Trustee should be deployed in a trusted environment, given that its role in validating guests and releasing secrets is inherently sensitive.

There are several ways to configure and deploy Trustee.

Architecture

Trustee is a composition of a few different services, which can be deployed in several different configurations. This figure shows one common way to deploy these components in conjunction with certain guest components.

flowchart LR

AA -- attests guest ----> KBS

CDH -- requests resource --> KBS

subgraph Guest

CDH <.-> AA

end

subgraph Trustee

KBS -- validates evidence --> AS

RVPS -- provides reference values--> AS

end

client-tool -- configures --> KBS

Components

| Component | Name | Purpose |

|---|---|---|

| KBS | Key Broker Service | Facilitates attestation and conditionally releases secrets |

| AS | Attestation Service | Validates hardware evidence |

| RVPS | Reference Value Provider Service | Manages reference values |

| CDH | Confidential Data Hub | Handles confidential operations in the guest |

| AA | Attestation Agent | Gets hardware evidence in the guest |

KBS Protocol

Trustee and the guest components establish a secure channel in conjunction with attestation. This connection follows the KBS protocol, which is specified here.

5.1 - CoCo Setup

If you are using Trustee with Confidential Containers, you’ll need to point your CoCo workload to your Trustee.

In your pod definition, add the following annotation.

io.katacontainers.config.hypervisor.kernel_params: "agent.aa_kbc_params=cc_kbc::http://<kbs-ip>:<kbs-port>"

The KBS IP is the address of whatever system you run Trustee on in the next steps. Make sure this address is reachable from within your guest. Don’t use localhost.

By default the KBS port will be 8080. You can verify this in the next steps.
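The annotation value can be assembled in a script. This is a minimal sketch: the function name `kbs_kernel_params` is hypothetical, and 192.0.2.10 is a documentation-only example address rather than a real KBS.

```shell
# Build the kernel_params annotation value from a KBS host and optional port.
kbs_kernel_params() {
  host=$1
  port=${2:-8080}   # falls back to the default KBS port
  case "$host" in
    localhost|127.*)
      # Loopback addresses resolve to the guest itself, not to Trustee.
      echo "KBS host must be reachable from the guest: $host" >&2
      return 1
      ;;
  esac
  printf 'agent.aa_kbc_params=cc_kbc::http://%s:%s\n' "$host" "$port"
}

kbs_kernel_params 192.0.2.10
```

The printed string is what goes in the `io.katacontainers.config.hypervisor.kernel_params` annotation.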

A full workload definition with the annotation might look like this.

apiVersion: v1
kind: Pod
metadata:
  labels:
    run: nginx
  name: nginx
  annotations:
    io.containerd.cri.runtime-handler: kata-qemu-coco-dev
    io.katacontainers.config.hypervisor.kernel_params: "agent.aa_kbc_params=cc_kbc::http://<kbs-ip>:<kbs-port>"
spec:
  containers:
  - image: bitnami/nginx:1.22.0
    name: nginx
  dnsPolicy: ClusterFirst
  runtimeClassName: kata-qemu-coco-dev

The Trustee address can also be configured via init data.

5.2 - Installation

Trustee can be deployed in several different configurations. In every case, Trustee should be deployed in a trusted environment. This could be a local server, some trusted third party, or even another enclave. Official support for deploying Trustee inside of Confidential Containers is being developed.

5.2.1 - Trustee Operator

Trustee can be installed on Kubernetes using the Trustee operator. When running Trustee in Kubernetes with the operator, the cluster must be trusted.

Install the operator

The operator (release v0.3.0 at the time of writing) is available in the Operator Hub.

Please follow the installation steps detailed here.

Verify that the controller is running.

kubectl get pods -n operators --watch

The operator controller should be running.

NAME READY STATUS RESTARTS AGE

trustee-operator-controller-manager-77cb448dc-7vxck 1/1 Running 0 11m

How to override the Trustee image

First, find out which Trustee image is currently running:

kubectl get csv -n operators trustee-operator.v0.3.0 -o json | jq '.spec.install.spec.deployments[0].spec.template.spec.containers[0].env[1].value'

"ghcr.io/confidential-containers/key-broker-service:built-in-as-v0.11.0"

The default image can be replaced with an updated version, for example Trustee v0.12.0:

NEW_IMAGE=ghcr.io/confidential-containers/key-broker-service:built-in-as-v0.12.0

kubectl patch csv -n operators trustee-operator.v0.3.0 --type='json' -p="[{'op': 'replace', 'path': '/spec/install/spec/deployments/0/spec/template/spec/containers/0/env/1/value', 'value':$NEW_IMAGE}]"
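If you prefer to pass the patch as strict JSON (double-quoted keys and values), it can be built with `printf` and inspected before it is applied. This is a sketch of one way to do that, not a change to the procedure above; the `PATCH_PATH` and `PATCH` variable names are our own.

```shell
NEW_IMAGE=ghcr.io/confidential-containers/key-broker-service:built-in-as-v0.12.0
PATCH_PATH=/spec/install/spec/deployments/0/spec/template/spec/containers/0/env/1/value

# Strict JSON: double-quoted keys and a double-quoted image value.
PATCH=$(printf '[{"op": "replace", "path": "%s", "value": "%s"}]' "$PATCH_PATH" "$NEW_IMAGE")
echo "$PATCH"

# To apply it (requires cluster access):
#   kubectl patch csv -n operators trustee-operator.v0.3.0 --type=json -p "$PATCH"
```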

Deploy Trustee

An example of how to configure Trustee is provided in this blog.

After the last configuration step, check that the Trustee deployment is running.

kubectl get pods -n operators --selector=app=kbs

The Trustee deployment should be running.

NAME READY STATUS RESTARTS AGE

trustee-deployment-f97fb74d6-w5qsm 1/1 Running 0 25m

Uninstall

Remove the Trustee custom resource (the KbsConfig instance).

CR_NAME=$(kubectl get kbsconfig -n operators -o=jsonpath='{.items[0].metadata.name}') && kubectl delete KbsConfig $CR_NAME -n operators

Remove the controller.

kubectl delete Subscription -n operators my-trustee-operator

kubectl delete csv -n operators trustee-operator.v0.3.0

5.2.2 - Trustee in Docker

Trustee can be installed using Docker Compose.

Installation

Clone the Trustee repo and change into it. The commands below use paths relative to the repository root.

git clone https://github.com/confidential-containers/trustee.git

cd trustee

Set up authentication keys.

openssl genpkey -algorithm ed25519 > kbs/config/private.key

openssl pkey -in kbs/config/private.key -pubout -out kbs/config/public.pub

Run Trustee.

docker compose up -d

Uninstall

Stop Trustee.

docker compose down

5.3 - KBS Client Tool

Trustee can be configured using the KBS Client tool. Other parts of this documentation may assume that this tool is installed.

The KBS Client can also be used inside of an enclave to retrieve resources from Trustee, either for testing, or as part of a confidential workload that does not use Confidential Containers.

When using the KBS Client to retrieve resources, it must be built with an additional feature which is described below.

Generally, the KBS Client should not be used for retrieving secrets from inside a confidential container. Instead, see secret resources.

Install

The KBS Client tool can be installed in two ways.

With ORAS

Pull the KBS Client with ORAS.

oras pull ghcr.io/confidential-containers/staged-images/kbs-client:latest

chmod +x kbs-client

This version of the KBS Client does not support getting resources from inside of an enclave.

From source

Clone the Trustee repo.